How to restore a dedicated server using Rescue mode

If the operating system is affected by critical system errors or the server cannot boot properly, the Customer Portal feature called Rescue mode may come in handy.

This feature is suitable for recovering most Linux-based operating systems.

How to use Rescue mode

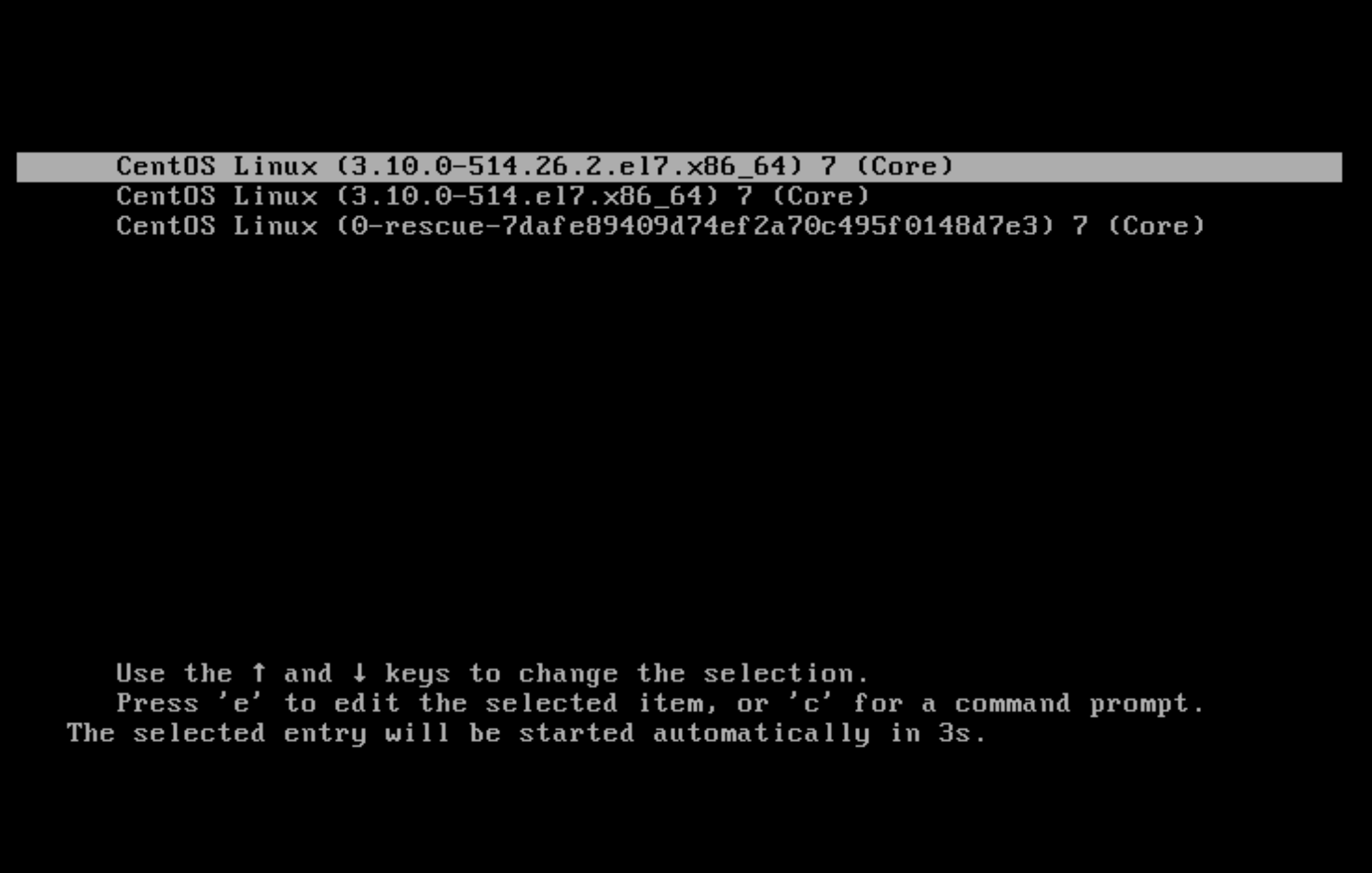

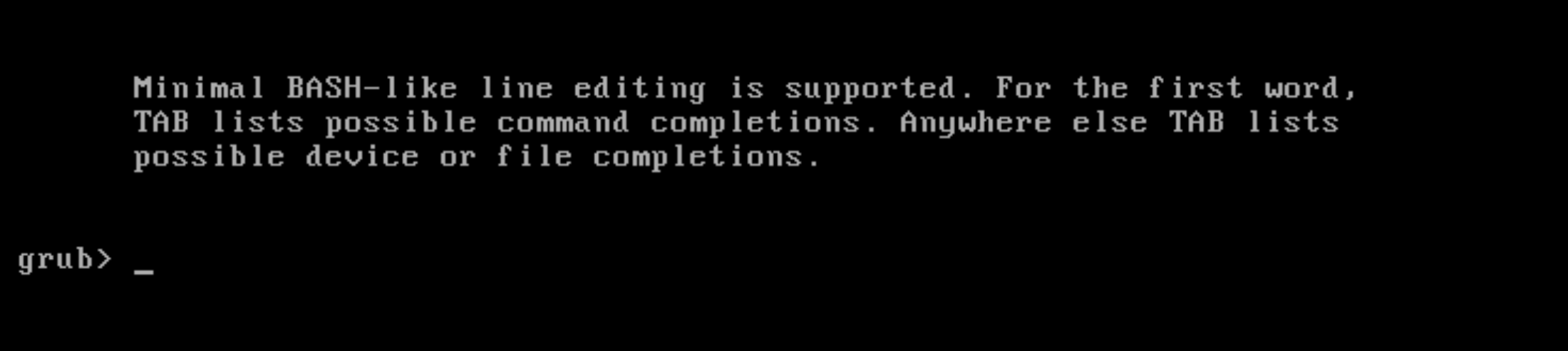

Imagine that something has gone wrong with the OS configuration: for example, the GRUB boot loader configuration file is corrupted, so the server shows a black screen and cannot boot.

There are several ways to solve this issue; one of them is Rescue mode.

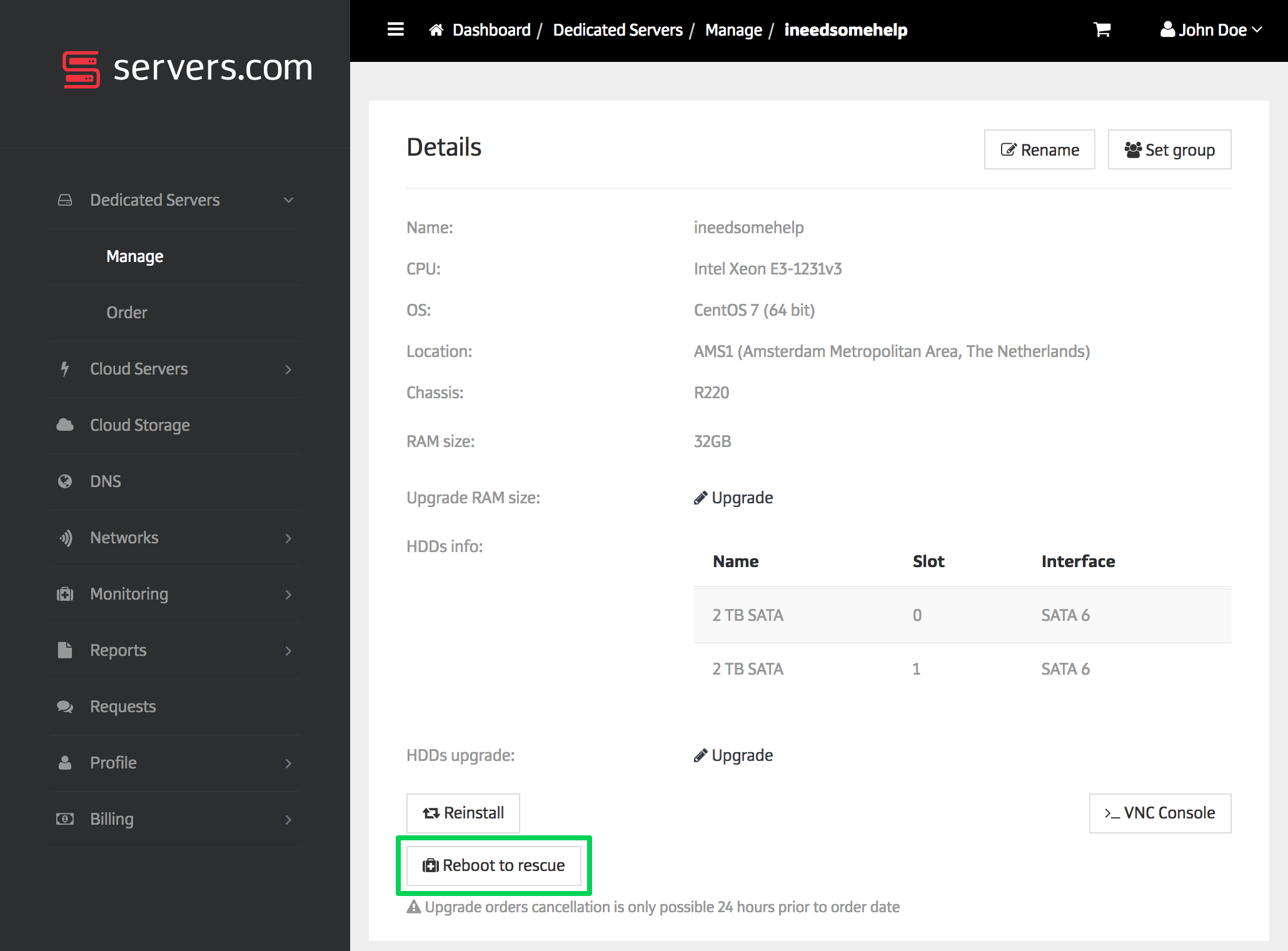

Press the "Reboot to rescue" button in the Customer Portal and wait for an email containing temporary login credentials (this usually takes up to 10 minutes).

Use these credentials to access the server via SSH:

    $ ssh 123.45.67.89 -l root
    Warning: Permanently added '123.45.67.89' (RSA) to the list of known hosts.
    root@123.45.67.89's password:

    * Welcome to DMGMTSrvInit 1.0 x86_64 [based on CentOS 6 x86_64]

    Dell OpenManage Deployment Toolkit:
    - RAID: raidcfg
    - DRAC: racadm4, racadm5, idracadm7
    - BIOS: syscfg
    - SYSTEM: omconfig, omreport, dsu

    Additional utils:
    - RAID: mdadm
    - FS: lvm, xfs, jfs, e2fs, nfs, ntfs, dosfs, parted, fdisk, cfdisk, sfdisk, grub
    - EDITORS: vi, nano, joe
    - SYSTEM: dmidecode, smartmontools, testdisk, hddtemp, lshw, memtester, ipmitool
    - NETWORK: ethtool, tcpdump, mtr, traceroute, tcptraceroute, nmap, dstat
    - TOOLS: rsync, lftp, curl, wget, nc, screen, mc, chkrootkit

    You can check speedtest files in your browser, url: http://<server ip>/

As the banner shows, a list of the available utilities is displayed upon login.

Next, recover the GRUB configuration file.

Get the list of available disks and partitions using the lsblk command:

    [root@dmgmtsrvinit.pxe ~]# lsblk
    NAME                              MAJ:MIN RM  SIZE RO TYPE  MOUNTPOINT
    sdb                                 8:16   0  1.8T  0 disk
    ├─sdb1                              8:17   0    1M  0 part
    ├─sdb2                              8:18   0  500M  0 part
    │ └─md127                           9:127  0  500M  0 raid1
    └─sdb3                              8:19   0  1.8T  0 part
      └─md126                           9:126  0  1.8T  0 raid1
        ├─C1500302784I0-home (dm-0)   253:0    0  1.8T  0 lvm
        ├─C1500302784I0-rootfs (dm-1) 253:1    0   10G  0 lvm
        └─C1500302784I0-swap (dm-2)   253:2    0    2G  0 lvm
    sda                                 8:0    0  1.8T  0 disk
    ├─sda1                              8:1    0    1M  0 part
    ├─sda2                              8:2    0  500M  0 part
    │ └─md127                           9:127  0  500M  0 raid1
    └─sda3                              8:3    0  1.8T  0 part
      └─md126                           9:126  0  1.8T  0 raid1
        ├─C1500302784I0-home (dm-0)   253:0    0  1.8T  0 lvm
        ├─C1500302784I0-rootfs (dm-1) 253:1    0   10G  0 lvm
        └─C1500302784I0-swap (dm-2)   253:2    0    2G  0 lvm

Judging by the volume name, the logical volume /dev/mapper/C1500302784I0-rootfs holds the root file system, and the small 500M RAID 1 array /dev/md127, mirrored across sda2 and sdb2, holds /boot.
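This guess rests only on volume names and sizes. A minimal sketch for double-checking it, assuming you first mount each candidate read-only on a scratch directory. The helper names `looks_like_root` and `looks_like_boot` and the `/mnt/check` path are hypothetical, and the checks are simple heuristics: an `etc/fstab` file plus a `usr` directory for the root file system, and kernel images (`vmlinuz-*`) at the top level for a separate /boot partition.

```shell
#!/bin/bash
# Hypothetical helpers: rough heuristics for telling what a mounted
# partition contains. They only look at a few well-known paths.

# Succeeds if DIR looks like the root file system of a Linux install.
looks_like_root() {
    local dir="$1"
    [ -f "$dir/etc/fstab" ] && [ -d "$dir/usr" ]
}

# Succeeds if DIR looks like a separate /boot partition, i.e. kernel
# images (vmlinuz-*) sit at its top level.
looks_like_boot() {
    local dir="$1" f
    for f in "$dir"/vmlinuz-*; do
        # Without a match the glob stays literal, so the -e test fails.
        [ -e "$f" ] && return 0
    done
    return 1
}

# Example use in the rescue shell (as root); read-only mounts avoid
# changing anything while you inspect:
#   mkdir -p /mnt/check
#   mount -o ro /dev/mapper/C1500302784I0-rootfs /mnt/check
#   looks_like_root /mnt/check && echo "root file system"
#   umount /mnt/check
#   mount -o ro /dev/md127 /mnt/check
#   looks_like_boot /mnt/check && echo "/boot"
#   umount /mnt/check
```

Unmount the scratch directory after each check so the devices are free for the real mounts that follow.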

Mount these partitions:

    mkdir /mnt/root
    mount /dev/mapper/C1500302784I0-rootfs /mnt/root
    mount /dev/md127 /mnt/root/boot

You'll also need to mount several other file systems so that the grub2-mkconfig command works properly:

    mount -o bind /dev /mnt/root/dev
    mount -o bind /sys /mnt/root/sys
    mount -t proc none /mnt/root/proc

Change the root directory using the chroot command:

    chroot /mnt/root

Run the grub2-mkconfig command, which generates a new GRUB configuration file:

    [root@dmgmtsrvinit.pxe /]# grub2-mkconfig -o /boot/grub2/grub.cfg
    Generating grub configuration file ...
    WARNING: Failed to connect to lvmetad. Falling back to device scanning.
    WARNING: Failed to connect to lvmetad. Falling back to device scanning.
    Found linux image: /boot/vmlinuz-3.10.0-514.26.2.el7.x86_64
    Found initrd image: /boot/initramfs-3.10.0-514.26.2.el7.x86_64.img
    Found linux image: /boot/vmlinuz-3.10.0-514.el7.x86_64
    Found initrd image: /boot/initramfs-3.10.0-514.el7.x86_64.img
    Found linux image: /boot/vmlinuz-0-rescue-7dafe89409d74ef2a70c495f0148d7e3
    Found initrd image: /boot/initramfs-0-rescue-7dafe89409d74ef2a70c495f0148d7e3.img
    WARNING: Failed to connect to lvmetad. Falling back to device scanning.
    WARNING: Failed to connect to lvmetad. Falling back to device scanning.
    done

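The command completed successfully. The lvmetad warnings are harmless here: the LVM metadata daemon (lvmetad) is not running inside the chroot, so LVM falls back to scanning the devices directly.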
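If you need to repeat this procedure, for example after a failed first attempt, the mount, chroot and grub2-mkconfig steps above can be collected into a few shell functions. This is a minimal sketch, not part of the Rescue mode tooling: the function names are hypothetical, the device names are taken from the lsblk example, and setting `DRY_RUN=1` only prints the commands so you can review them before running anything as root. It also adds an unmount step in reverse order and a rough count of menu entries in the regenerated grub.cfg.

```shell
#!/bin/bash
# Sketch: mount the installed system, regenerate grub.cfg through chroot,
# then release the mounts. Run it from the rescue shell, not inside a chroot.
# Function names are hypothetical; device names come from the lsblk example.

# Run a command, or only print it when DRY_RUN=1.
run() {
    if [ "${DRY_RUN:-0}" = 1 ]; then
        printf '%s\n' "$*"
    else
        "$@"
    fi
}

# prepare_chroot ROOT_DEV BOOT_DEV TARGET: the same mounts as the manual steps.
prepare_chroot() {
    local root_dev="$1" boot_dev="$2" target="$3"
    run mkdir -p "$target" || return 1
    run mount "$root_dev" "$target" || return 1
    run mount "$boot_dev" "$target/boot" || return 1
    # grub2-mkconfig needs device nodes, sysfs and procfs inside the chroot.
    run mount -o bind /dev "$target/dev" || return 1
    run mount -o bind /sys "$target/sys" || return 1
    run mount -t proc none "$target/proc" || return 1
}

# regenerate_grub_cfg TARGET: runs grub2-mkconfig inside the chroot
# non-interactively instead of from an interactive chroot shell.
regenerate_grub_cfg() {
    run chroot "$1" grub2-mkconfig -o /boot/grub2/grub.cfg
}

# count_menuentries CFG: number of menuentry lines in a grub.cfg,
# including indented ones inside a submenu block.
count_menuentries() {
    grep -cE '^[[:space:]]*menuentry ' "$1"
}

# release_chroot TARGET: unmount in the reverse order of prepare_chroot.
release_chroot() {
    local target="$1"
    run umount "$target/proc" || return 1
    run umount "$target/sys" || return 1
    run umount "$target/dev" || return 1
    run umount "$target/boot" || return 1
    run umount "$target" || return 1
}

# Example (as root in the rescue shell), reviewing the commands first:
#   DRY_RUN=1 prepare_chroot /dev/mapper/C1500302784I0-rootfs /dev/md127 /mnt/root
#   prepare_chroot /dev/mapper/C1500302784I0-rootfs /dev/md127 /mnt/root
#   regenerate_grub_cfg /mnt/root
#   count_menuentries /mnt/root/boot/grub2/grub.cfg
#   release_chroot /mnt/root
#   shutdown -h now
```

Because chroot is given a command here rather than started as an interactive shell, there is no chroot session to exit afterwards; the mounts can be released explicitly before halting the server.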

Exit the chroot and shut the server down cleanly:

    [root@dmgmtsrvinit.pxe /]# exit
    [root@dmgmtsrvinit.pxe ~]# shutdown -h now

    Broadcast message from root@dmgmtsrvinit.pxe
    (/dev/pts/0) at 18:29 ...

    The system is going down for halt NOW!
    [root@dmgmtsrvinit.pxe ~]# Connection to 123.45.67.89 closed by remote host.
    Connection to 123.45.67.89 closed.

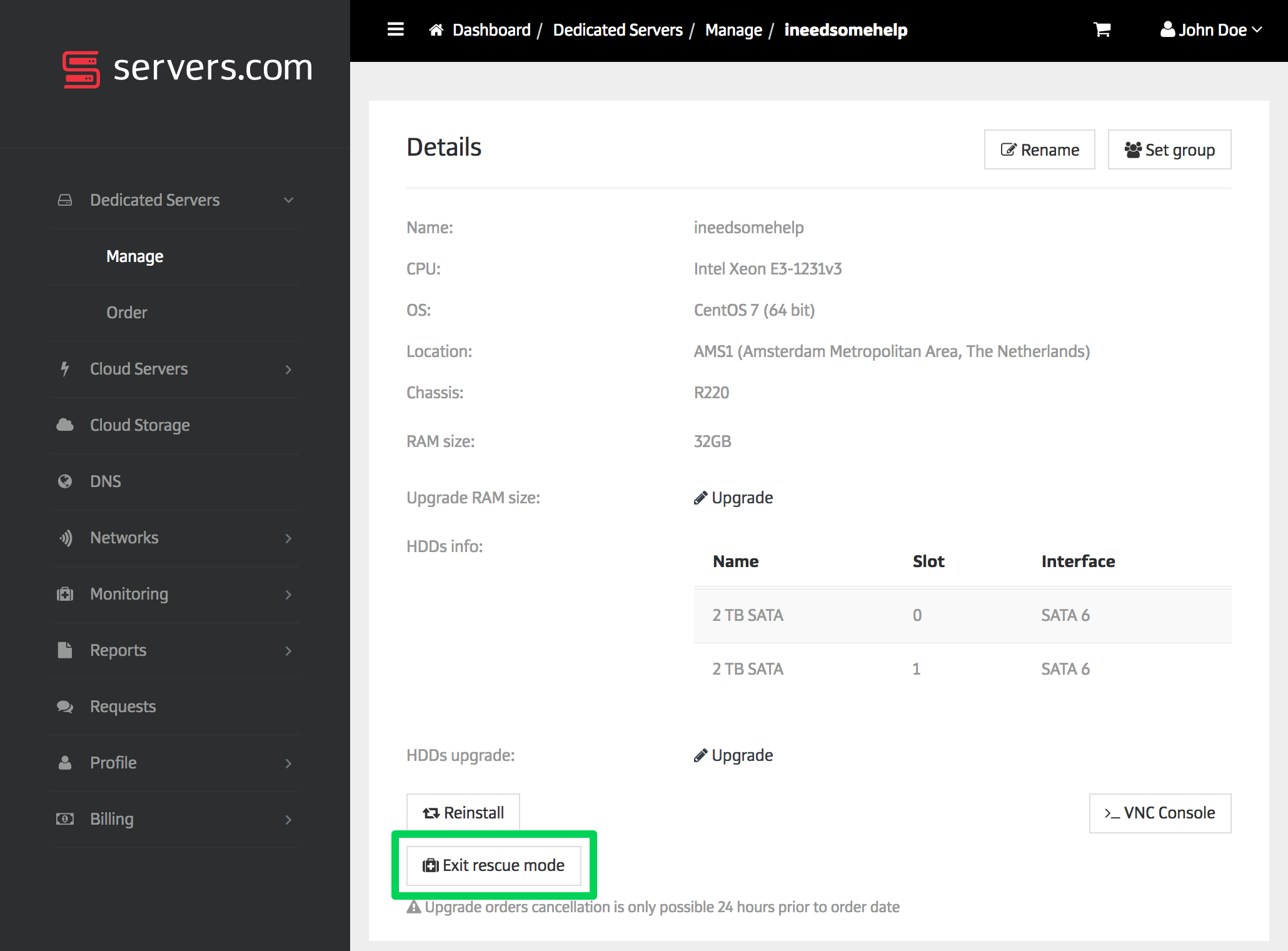

Exiting Rescue mode

Once the recovery work is finished, press the "Exit rescue mode" button in the Customer Portal. The server will leave Rescue mode and boot normally into the OS installed on its disks.

The loader is working again!