The first session I sat in at GDC this year was given by Stefano Corazza, head of Roblox Studio, who discussed how the industry and Roblox are advancing content creation with generative AI. The session was packed. In fact, I ended up having to stand at the back during the talk, not because I was late (I know, very unlike me) but because it was already full, which shows just how much interest there is in this topic.

Although not all the interest is positive.

I’d go so far as to say that generative AI in gaming is possibly one of the most divisive topics within the gaming industry at the moment.

In a Wired article earlier this year, Patrick Mills, CD Projekt Red’s acting franchise content strategy lead, said “I have seen some, frankly, ludicrous claims about stuff that’s supposedly just around the corner. I saw people suggesting that AI would be able to build out Night City, for example. I think we’re a way off from that.”

Then you have Allen Adham, chief design officer at Blizzard, saying “prepare to be amazed. We are on the brink of a major evolution in how we build and manage our games”.

So, which is it? Are we “on the brink of a major evolution” or still “a way off”?

I can’t be the only one who’s confused by the mixed messaging around generative AI in gaming?

It’s why I decided to try and educate myself a little more on the different schools of thought. Because I’m all heart, I thought I’d share what I found. Starting with a few facts that I collected on my travels around the internet when researching the topic.

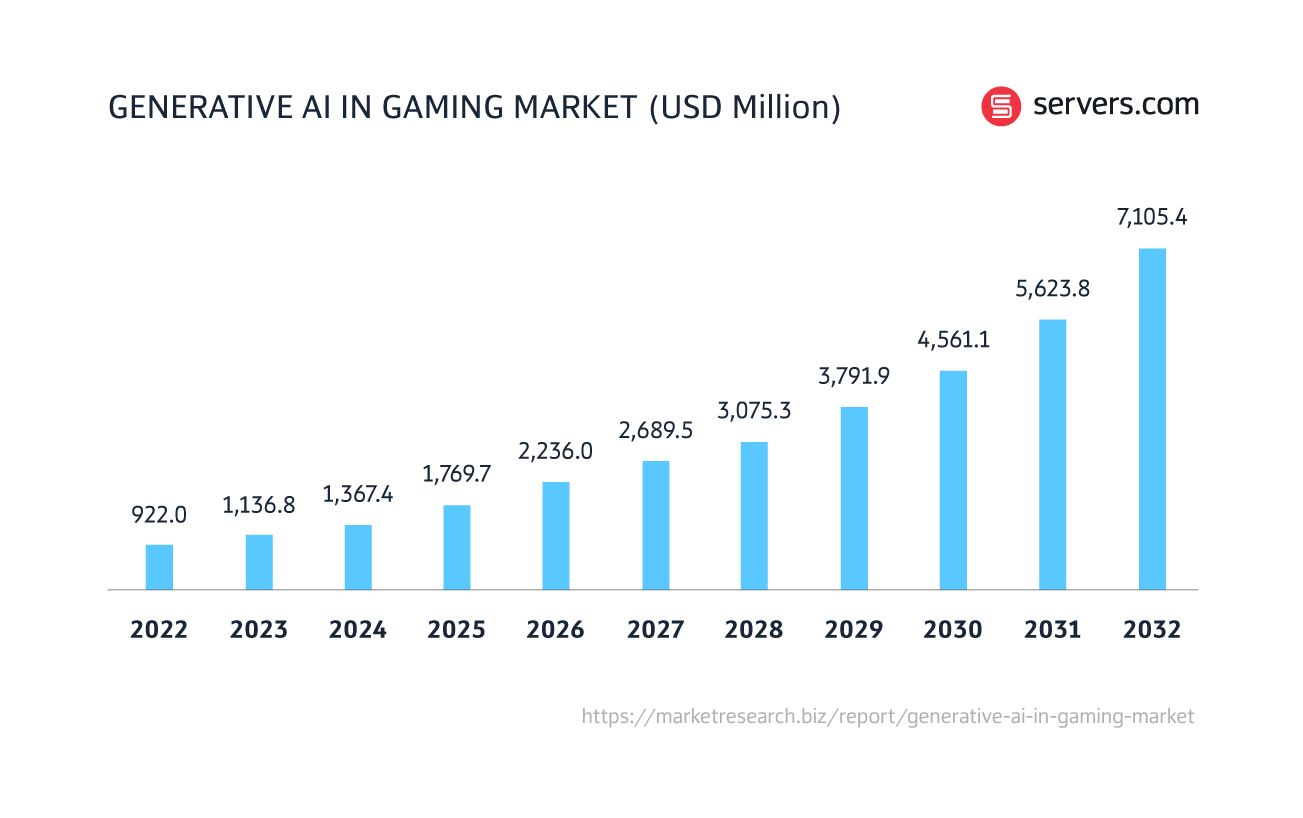

Let’s start with the market size. In 2022, the global market for generative AI in gaming was worth $922 million. That number is expected to grow to $7.1 billion in the next ten years.

That’s pretty impressive growth.
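For a rough sense of what that means year on year, here is a minimal sketch of the implied compound annual growth rate. It assumes “the next ten years” means exactly ten years of compounding from the 2022 figure, which the forecast itself may not; the function name `implied_cagr` is my own, not something from the report.

```python
def implied_cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate implied by a start value, an end value and a period."""
    return (end_value / start_value) ** (1 / years) - 1


# Figures quoted above: $922 million in 2022, $7.1 billion ten years later.
# Assumption: exactly ten compounding years between the two figures.
rate = implied_cagr(922e6, 7.1e9, 10)
print(f"Implied annual growth: {rate:.1%}")
```

Under that assumption, the market would need to grow by somewhere between 22% and 23% every year for a decade to hit the forecast.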

Where’s the growth coming from? Well, a number of companies have stuck their generative AI stake in the ground over the last few months.

Unity announced through a cryptic teaser video that it is working on a suite of generative AI tools for its game development engine, which it says will transform the gamer experience by 10X. What appears to be a beta program for developers of third-party AI art tools for its game engine “aims to open a marketplace for generative AI software”.

Ubisoft’s Ghostwriter also entered the market this year. It’s an AI writing tool that can be used to create NPC “barks” – quick phrases in-game characters say when an event is triggered by a player.

And Roblox launched generative AI tools for its multi-million-strong creator community: a material generator, which creators can use to simulate lighting, and AI code assist, which increases the speed of coding.

Corazza said in his GDC talk, “we’re seeing a new generation of generative AI creating amazing results. Advances in the technology and how it can be used are happening at the speed of weeks”.

Then of course there is generative AI software that has been available for a while, such as image generators Stable Diffusion, Midjourney and DALL-E – all of which launched last year and are already being used by developers. AI creator Allen T developed a simple side-scrolling video game with just OpenAI’s GPT-4 and Midjourney. He’s included detailed steps on how he did it on his X (formerly Twitter) account if you’re interested in giving it a go.

Another fact that I stumbled upon is just how expensive generative AI tools can be. CNBC published a fascinating interview with Nick Walton, CEO of a startup called Latitude, which created AI Dungeon, a game that lets players use AI to “create fantastical tales based on their prompts”. As the game became more popular, however, Latitude faced a problem – “the more people played AI Dungeon, the bigger the bill Latitude has to pay OpenAI”. Eventually the company was spending $200,000 a month on OpenAI and AWS “to keep up with the millions of user queries it needed to process each day”.

Training a large language model such as OpenAI’s GPT-3 could cost more than $4 million, analysts and technologists say.

Unity believes it’s found a solution with Barracuda – a package that allows game developers to run neural networks (a type of machine learning model) on the Unity game engine. Where it gets interesting is that Barracuda runs on all Unity-supported platforms, which include desktop, mobile and consoles. So, it’s a true cross-platform neural network library. And because it can run locally on any of these devices, without the need to maintain servers, there are no server bills to pay.

But the rest of the AI solutions out there remain costly. For how long? Nobody’s sure, although Nvidia CEO Jensen Huang says AI will be “a million times” more efficient in the next decade because of improvements in chips, software and other computer parts.

So, those are the facts. What are the opinions?

One came from Julian Togelius, co-director of NYU Game Innovation Lab, who explained that you can’t just take something that is very hard to control and use it for something like level design. “You can forgive generators that produce a face with wonky ears or some lines of gibberish text. But a broken game level, no matter how magical it looks, is useless”. A broken level renders a game unplayable.

He can’t see how replacing the more established procedural generation method – where data or content is created via an algorithm instead of a human creating it manually – with generative AI will make a big difference. “[procedural generation] works because this content doesn’t really need to function: It doesn’t have functionality constraint”.

It was another quote from Patrick Mills that really summed up this issue with the lack of realism for me – “a fundamental reason why these generative AIs can’t make something like Night City is because these tools are designed to produce specific outcomes. A lot of people seem to be under the impression that these are somehow close to general intelligences. But that’s not how it works. You’d need to custom-build an AI that could build Night City, or open world cities in general.”

The question of ownership came up during the Roblox talk at GDC, following a question from a member of the audience, and it features in almost every conversation around generative AI in gaming.

Who owns what AI creates? And how do we police what AI uses? And should we?

In an email to employees in May, Activision Blizzard’s CTO Michael Vance warned them not to use Activision Blizzard’s IP with external image generators, showing concerns around loss of confidential company data and intellectual property.

And there have already been multiple publicised cases around AI replication of human actors without proper compensation and default sharing of artwork to train AI without alerting the artists. In response to the latter story, which related to Epic Games’ ArtStation, CEO Tim Sweeney tweeted, “we’re not locking AI out by default, because that would turn Epic into a ‘You can’t make AI’ gatekeeper by default and prohibit uses that would fall under copyright law’s fair use rules”.

Perhaps one of the most high-profile stories has been Getty Images accusing Stability AI, the company behind Stable Diffusion, of taking around 12 million images from its photo database “without permission ... or compensation ... as part of its efforts to build a competing business”.

Will we see more regulations introduced around generative AI? Sean Kane, co-chair of the Interactive Entertainment Group at Frankfurt Kurnit, believes “we’re going to have an answer relatively soon, I think, but it’s still a little nebulous”.

One to keep an eye on then.

So many of the conversations around generative AI are accompanied by anxiety and concern for the future of humans in game development.

Chris Lee, head of immersive technologies at Amazon Web Services, is a big believer in generative AI being able to reduce the huge number of hours it takes to develop enormous open-world games. As he puts it, “game developers have never been able to keep up with the demands of our audience”.

One developer said they were able to generate concept art for a single image in an hour using generative AI versus three weeks without it.

These kinds of stories have got people excited about the possibility of generative AI enabling micro studios (1-2 people) to create viable commercial games. Small teams have done it before: Innersloth, a studio of just five employees at the time, made hit game Among Us.

But Unity CEO John Riccitiello is more cautious. “While these models will eventually produce simple games ... the Flappy Birds of the world, I think the rich and complex things are gonna be very hard for these models to produce,” he says.

It’s why Hidden Door’s Hilary Mason says generative AI “isn’t about cutting humans out of the process entirely. It’s just shifting them from a production role to a directing role. More than that, the role of AI isn’t being looked at as content creation itself. It’s a framework for content creators to create with.”

As Corazza said in his GDC talk, generative AI’s success is down to context, and too often we expect AI to give an answer without giving it enough context. In his experience, giving the AI three lines of code rather than none increases its success rate by 50%.

To put that in context (pun intended) – in an experiment to create potions using AI which he documented on X (formerly Twitter), Emmanuel de Maistre, co-founder and CEO of Scenario.com stated that he used a dedicated training dataset of 98 images (which is quite large).

But what if you don’t have anywhere near that number of images for training? This is where one of generative AI’s biggest weaknesses lies. It cannot bridge gaps in missing information.

In his article on ‘The potential and limitations of Generative AI’, Aiden Crawford said, “while AI can create variations on existing content, it struggles to create accurate and realistic content when there is little or no existing information available. This limitation has significant implications for the gaming industry as game developers and designers often rely on creative and imaginative content to create new games and experiences.”

In my search for an answer to the question of whether we are “on the brink of a major evolution” or still “a way off”, I kept coming back to Corazza’s point from his GDC talk that “advances in the technology and how it can be used are happening at the speed of weeks”.

There is clearly still a huge amount of work that needs to be done to scale the use of generative AI within different types of games. That includes the cost, the human impact and role, regulations and the gaming server infrastructure. But with advances in the technology happening in weeks, I think it’s safe to assume that generative AI in gaming will look different by the end of this year to how it looks now.

And in terms of further into the future, well, I believe that ultimately, we’ll see a world where AI and humans will work side by side to create unique experiences for gamers much faster than we can do it today.

Will that be the same quantity of game launches that we have today but with more content and fewer incomplete games? Hopefully – we don’t want to see any more incidents like CD Projekt Red’s Cyberpunk 2077 launch.

Or will we see the time to make a game reduced so much that way more game launches of the same quality flood the market, making my Steam wish list even longer and impossible to get through!?

Jarrod Palmer is our gaming industry specialist. He knows the issues our customers face and how best to help them. He's also a great Warzone player and is the UK office FIFA champion.