Streaming is the lifeblood of modern-day entertainment and communications. But the permeation of streaming is a relatively recent phenomenon. To understand where the streaming industry lies today, we must look back to the 90s and the development of the first streaming protocols.

In this whitepaper, we’ll walk through a chronology of the streaming protocols that have paved the way for this multi-billion-dollar industry. We discuss how each protocol came about and the latest developments fueling the streaming industry today.


Worldwide video-on-demand revenues are forecast to reach $152.2 billion by the end of 2023. Combined with an annual growth rate of 9.52% that means the sector should achieve a market volume of $219 billion by 2027.
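The projection above is a compound-growth calculation. As a quick sanity check, the sketch below compounds the 2023 figure forward at the quoted annual rate; the function name is illustrative, and the result should land at roughly $219 billion.

```python
def project_revenue(base: float, annual_growth: float, years: int) -> float:
    """Compound a base value forward by a fixed annual growth rate."""
    return base * (1 + annual_growth) ** years


# Figures quoted above: $152.2bn in 2023, 9.52% annual growth, target year 2027.
projected_2027 = project_revenue(152.2, 0.0952, 2027 - 2023)
print(f"Projected 2027 revenue: ${projected_2027:.1f} billion")
```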

One of the largest segments in streaming is Subscription Video Streaming on Demand (SVoD). The SVoD market is projected to achieve revenues of $95.35 billion by the end of 2023, with the number of SVoD users reaching heights of 1.64 billion by 2027.

Likewise, research points towards a surge in ad-supported video-on-demand (AVOD) revenues in the coming years, with the market expected to surpass $31 billion by 2027. The global live streaming market is also growing rapidly, forecast to reach a value of $2.61 billion by 2026.

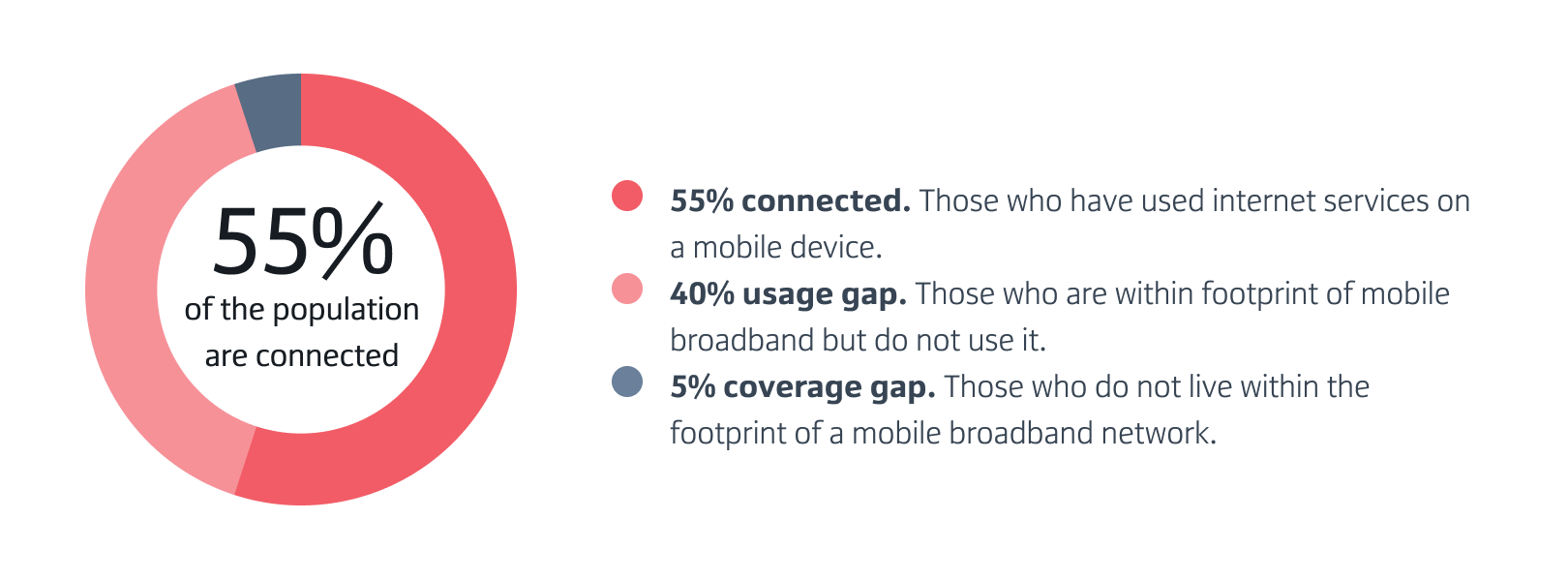

Low barriers to entry, ease of accessibility, and mobile device penetration globally are driving demand for streaming services like never before. Testament to the industry’s success, prominent players in the streaming industry today (think Netflix, YouTube, Twitch, and communications platforms like Skype and Zoom) are all household names.

But none of this would have been possible without the foundational frameworks that allow devices to communicate over the internet. Streaming protocols.

Streaming protocols define the standards and methods for transmitting multimedia content over the internet from one device to another. They function as regulatory blueprints, standardizing the rules for how media files are encoded, transmitted, and decoded, ensuring a seamless delivery and playback experience for the end user.

For example, video and audio streaming protocols segment data into small chunks that can be transmitted more easily. This process relies on codecs (programs that compress or decompress media files) and is how content is delivered and played back to end-users.
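As a rough illustration of the chunking idea, the sketch below splits a byte payload into fixed-size pieces and confirms they can be reassembled. It is a simplification: real protocols cut media on time or frame boundaries rather than at arbitrary byte offsets, and the payload and chunk size here are placeholders.

```python
from typing import Iterator


def split_into_chunks(payload: bytes, chunk_size: int) -> Iterator[bytes]:
    """Yield consecutive slices of the payload, each at most chunk_size bytes."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    for offset in range(0, len(payload), chunk_size):
        yield payload[offset:offset + chunk_size]


# 1024 placeholder bytes standing in for an encoded media file.
media = bytes(range(256)) * 4
chunks = list(split_into_chunks(media, 300))

# The receiver can rebuild the original by concatenating chunks in order.
assert b"".join(chunks) == media
print([len(chunk) for chunk in chunks])
```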

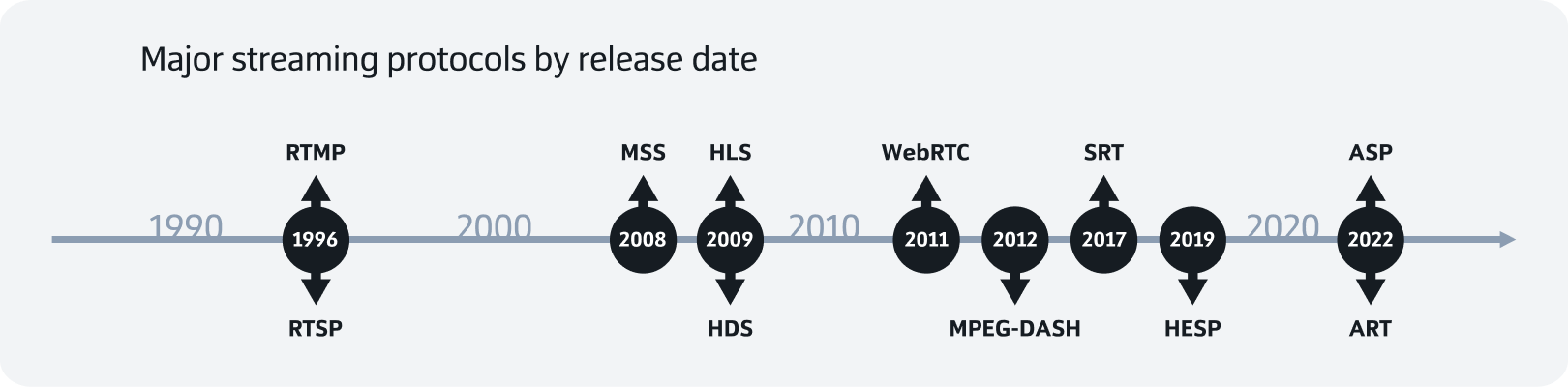

There are many different streaming protocols, each with unique strengths and weaknesses. This whitepaper explores each of the major streaming protocols chronologically, piecing together a comprehensive history of this industry's impressive evolution over time.

It will cover the following streaming protocols:

- Real Time Messaging Protocol (RTMP)
- Real Time Streaming Protocol (RTSP)
- HTTP Live Streaming (HLS)
- HTTP Dynamic Streaming (HDS)
- Microsoft Smooth Streaming (MSS)
- Dynamic Adaptive Streaming over HTTP (MPEG-DASH)
- Web Real-Time Communications (WebRTC)
- Secure Reliable Transport (SRT)
- High Efficiency Streaming Protocol (HESP)
- Ardent Streaming Protocol (ASP)
- Adaptive Reliable Transport (ART)
- Media Relay version 2 (MRV2)

| The first live stream | The first live streaming media player | The first presidential webcast |
| --- | --- | --- |
| Today, live streaming is part and parcel of everyday life. But it wasn’t until 1993 that the first live video and audio stream was successfully executed. It all started with some computer scientists-cum-musicians. On the 24th of June 1993, their band, Severe Tire Damage, successfully streamed one of their gigs over a network called the Multicast Backbone (MBone). | In 1995, RealNetworks (formerly Progressive Networks) created RealPlayer. This was the first media player capable of live streaming and played host to the very first public live stream (webcast): a baseball game between the New York Yankees and the Seattle Mariners. By 1997, the company had commercialized live video streaming with the launch of its RealVideo program. | On November 8th, 1999, George Washington University held the world’s first presidential webcast. Bill Clinton participated in the event, entitled “Third Way Politics in the Information Age”, which set a precedent for the popular uptake of live streaming. |

But the most notable development of the decade was the release of two seminal streaming protocols: Real Time Messaging Protocol (RTMP) and Real Time Streaming Protocol (RTSP).

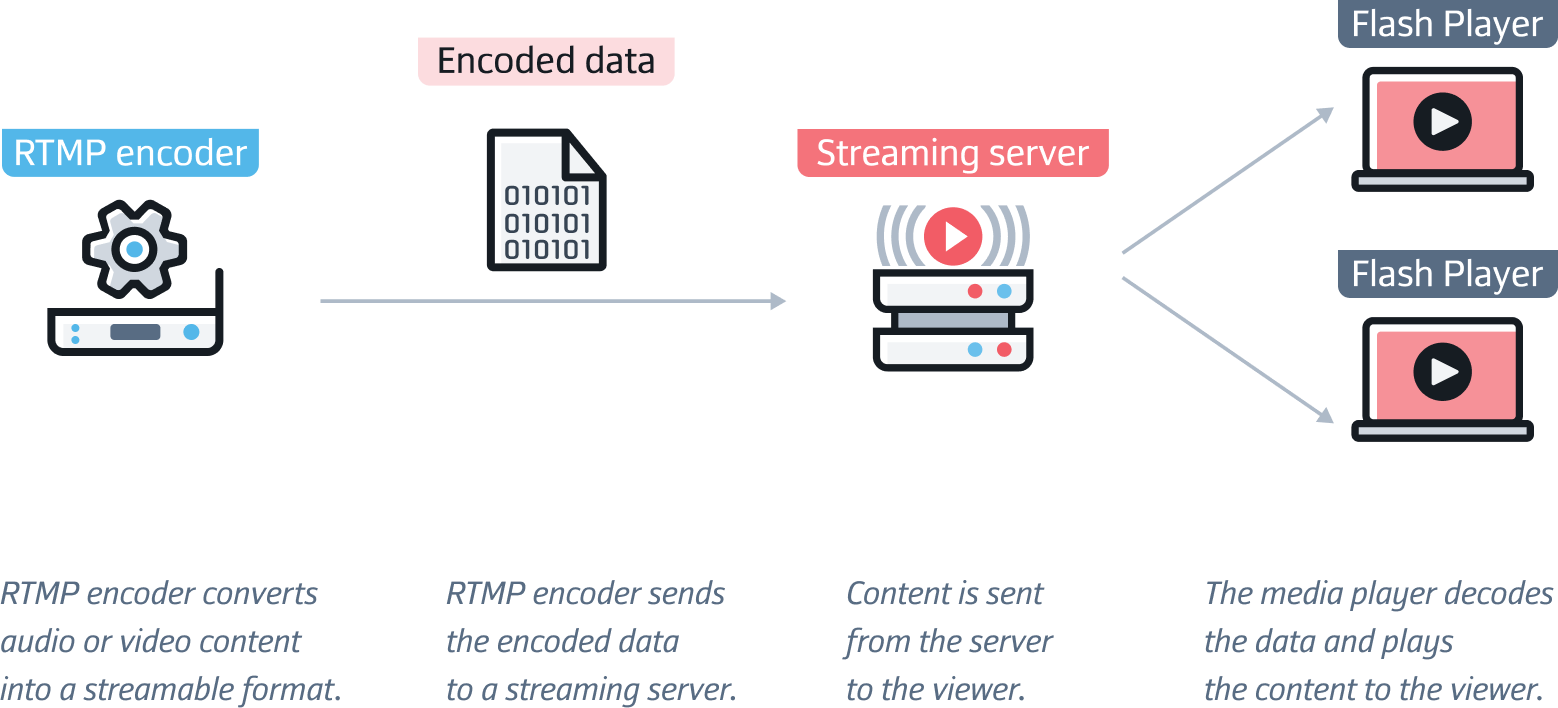

In 1996, Macromedia (the multimedia software company that would later be acquired by Adobe) developed RTMP. The protocol was developed as a Transmission Control Protocol (TCP) technology to connect the original Flash Player to dedicated media servers and facilitate live streaming over the internet.

RTMP sent files in a Flash format called Small Web Format (SWF) and could deliver audio, video, and text data across devices. The ability to transmit data from a server to a video player in real time was game-changing.

After the discontinuation of Flash in 2020, RTMP fell out of favor for general use. But the protocol is still used due to some notable advantages including low latency and reduced overheads. These days the protocol is used principally for delivering encoded content to media servers, streaming platforms, and social media platforms.

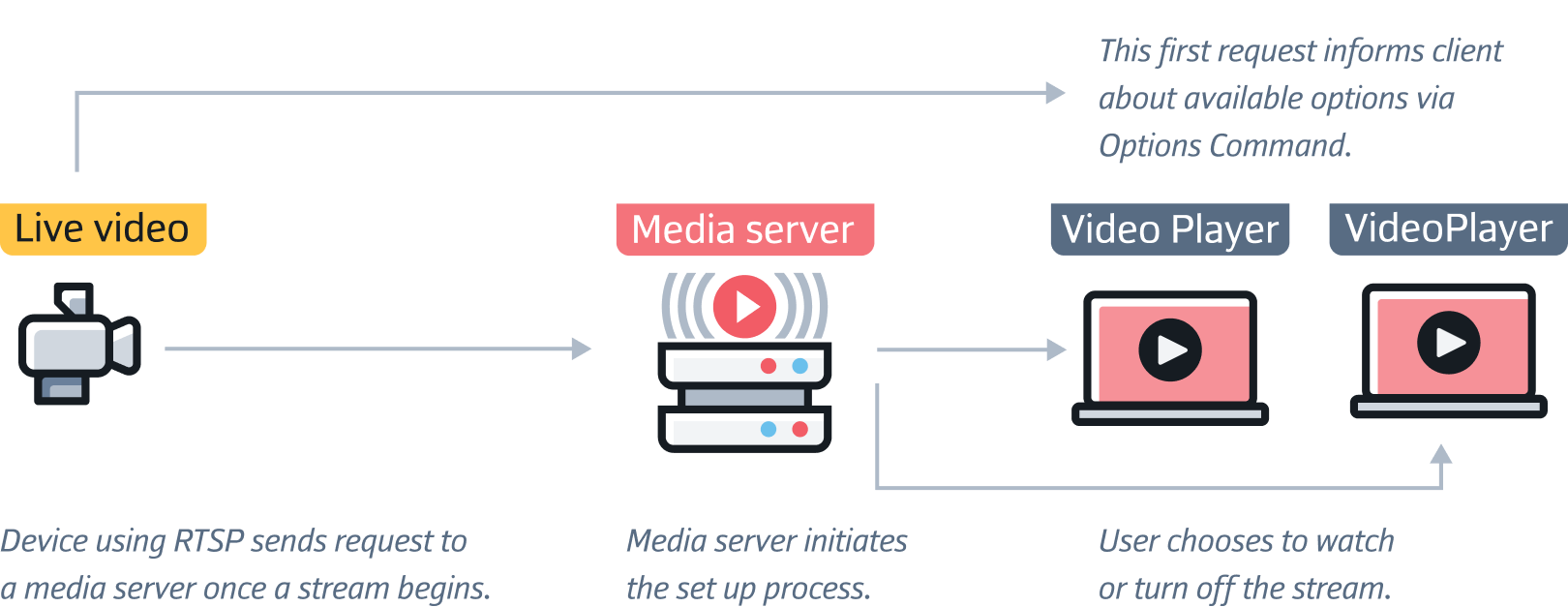

Around the same time that Macromedia was developing RTMP, a first draft for another protocol was being submitted. RTSP was developed under a partnership between RealNetworks, Netscape, and Columbia University, offering something that was truly innovative at the time: VCR-like capabilities enabling users to control the viewing experience with the ability to play, pause, and rewind media streams.

The official 1998 Standards Track memo for RTSP (RFC 2326), co-authored by contributors from Columbia University, Netscape, and RealNetworks, defines the protocol as “an application-level protocol for control over the delivery of data with real-time properties”.

The memo continues:

“RTSP provides an extensible framework to enable controlled, on-demand delivery of real-time data, such as audio and video. Sources of data can include both live data feeds and stored clips. This protocol is intended to control multiple data delivery sessions, provide a means for choosing delivery channels such as UDP, multicast UDP and TCP, and provide a means for choosing delivery mechanisms based upon RTP”.
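To make the "VCR-like" control more concrete, here is a minimal sketch of how RTSP/1.0 control requests are laid out as text: a request line, a `CSeq` sequence header, and (once a session exists) a `Session` header. The helper name, the URL, and the session identifier are hypothetical, and the sketch only formats messages; it does not open a connection or handle responses.

```python
from typing import Optional


def build_rtsp_request(method: str, url: str, cseq: int,
                       session: Optional[str] = None) -> str:
    """Format a bare-bones RTSP/1.0 request as text."""
    lines = [f"{method} {url} RTSP/1.0", f"CSeq: {cseq}"]
    if session is not None:
        # Requests such as PLAY and PAUSE refer to a previously set-up session.
        lines.append(f"Session: {session}")
    # Header lines end with CRLF; an empty line terminates the header block.
    return "\r\n".join(lines) + "\r\n\r\n"


# Hypothetical stream URL and session identifier, for illustration only.
play = build_rtsp_request("PLAY", "rtsp://example.com/stream", 3, session="12345678")
pause = build_rtsp_request("PAUSE", "rtsp://example.com/stream", 4, session="12345678")
print(play)
print(pause)
```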

After being published in 1998, RTSP quickly became the leading protocol for audio and video streaming. And though it has since been eclipsed by HTTP-based technologies, it remains the protocol of choice for the surveillance and video conferencing sectors due to its compatibility across many devices.

RTMP and RTSP were leading protocols of their time but have been largely replaced by HTTP-based and adaptive bitrate streaming technologies which are easier to scale to the demands of large broadcasts.

HTTP stands for Hyper Text Transfer Protocol. HTTP is a method for encoding and transferring information between a client and a web server and is the principal protocol for the transmission of information over the internet.

HTTP streaming involves push-style data transfer where a web server continuously sends data to a client over an open HTTP connection.

HTTP/1.0 was introduced in 1996, with later versions following: HTTP/1.1 in 1997, HTTP/2 in 2015, and HTTP/3 in 2022.

First created in 2002, adaptive bitrate streaming (ABS) is a technology for improving HTTP streaming.

ABS dynamically adjusts the compression level and video quality of streams to match bandwidth availability so that streams can be delivered efficiently and at the highest usable quality.
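The selection logic behind adaptive bitrate streaming can be sketched in a few lines. This is a simplified illustration under stated assumptions: the bitrate ladder, the 0.8 safety factor, and the function name are invented for the example, and production players also weigh buffer level, screen size, and throughput history.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Rendition:
    name: str
    bitrate_kbps: int


# Illustrative bitrate ladder; real services tune these values per title and codec.
LADDER: List[Rendition] = [
    Rendition("240p", 400),
    Rendition("480p", 1200),
    Rendition("720p", 2800),
    Rendition("1080p", 5000),
]


def pick_rendition(ladder: List[Rendition], measured_kbps: float,
                   safety_factor: float = 0.8) -> Rendition:
    """Pick the highest-bitrate rendition that fits within a share of measured bandwidth."""
    budget = measured_kbps * safety_factor
    affordable = [r for r in ladder if r.bitrate_kbps <= budget]
    if not affordable:
        # Nothing fits, so fall back to the lowest quality rather than stalling.
        return min(ladder, key=lambda r: r.bitrate_kbps)
    return max(affordable, key=lambda r: r.bitrate_kbps)


for bandwidth in (300, 4000, 10000):
    print(bandwidth, "kbps ->", pick_rendition(LADDER, bandwidth).name)
```

Keeping a margin below the measured bandwidth is a common design choice: it trades a little quality for fewer rebuffering events when throughput dips.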

At the turn of the millennium, live streaming and VoD started gaining even more traction. This was the decade in which some of the biggest names in the industry were born. YouTube hit the scene in 2005, followed by Twitch (then justin.tv) in 2006, and Netflix's streaming service in 2007. As access to the internet and mobile technology widened, internet video streaming hit the masses.

In 2005, Adobe acquired Macromedia and Adobe Flash fast became a mainstay of video streaming. But in 2010, three years after the iconic release of the first iPhone, Steve Jobs announced that Apple would cease to support the platform.

In his open letter, Thoughts on Flash, Jobs writes:

“I wanted to jot down some of our thoughts on Adobe’s Flash products so that customers and critics may better understand why we do not allow Flash on iPhones, iPods and iPads. Adobe has characterized our decision as being primarily business driven – they say we want to protect our App Store – but in reality it is based on technology issues. Adobe claims that we are a closed system, and that Flash is open, but in fact the opposite is true.”

Needless to say, Apple had been working on its own proprietary format. HLS.

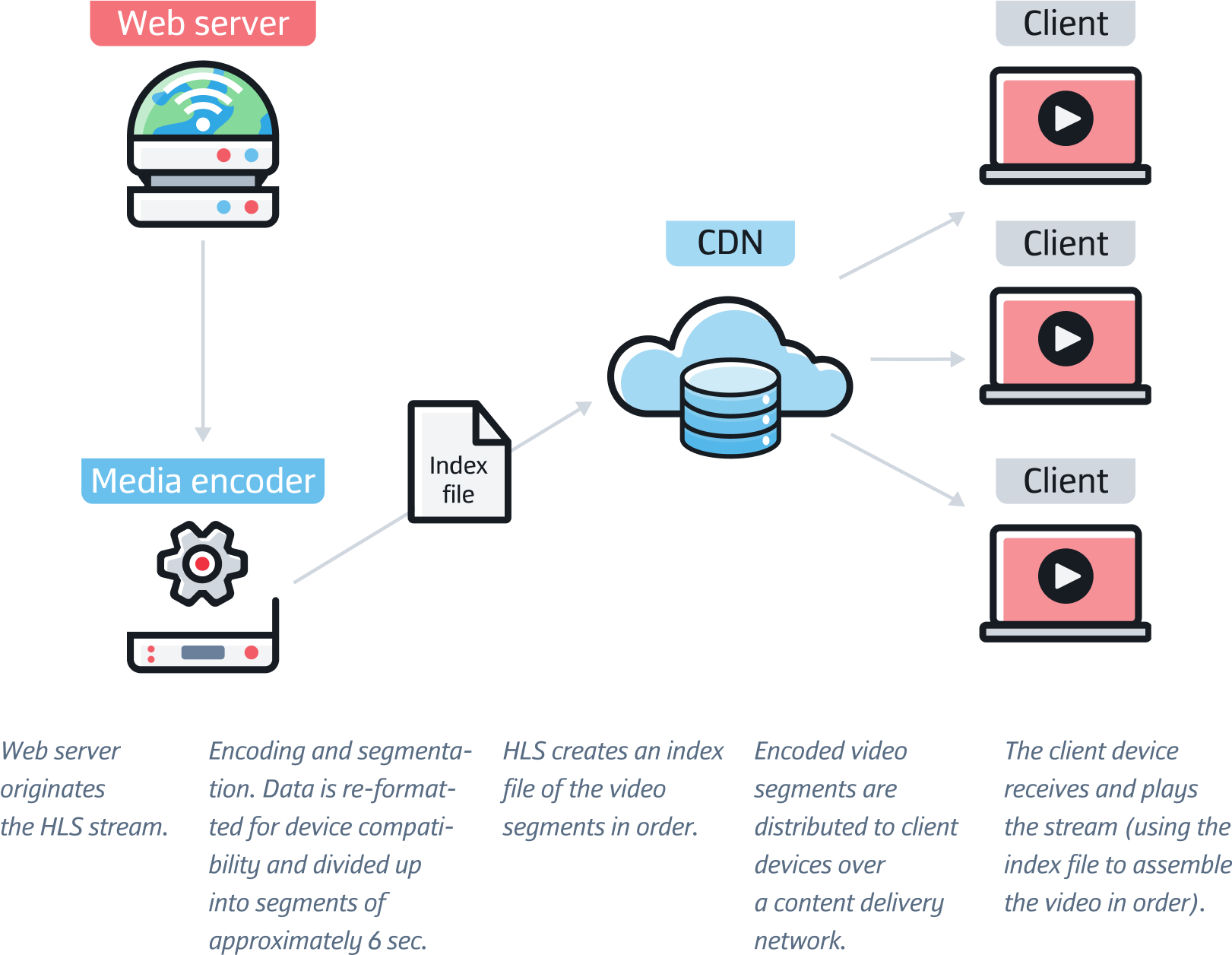

HLS is an adaptive HTTP-based streaming protocol that was first released in 2009. HLS breaks video and audio data into chunks which are compressed and transmitted over HTTP to the viewer’s device. The protocol was initially only supported by iOS but has since become widely supported across different browsers and devices. When Adobe Flash was phased out in 2020, HLS became the streaming protocol of choice.
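In HLS, the available quality levels are advertised to the player in a plain-text playlist, which the player fetches over HTTP before requesting chunks. The sketch below assembles a minimal top-level playlist using the `#EXTM3U` and `#EXT-X-STREAM-INF` tags; the bandwidths, resolutions, and file paths are made-up examples, and a real playlist would usually carry more attributes (codecs, frame rate, and so on).

```python
from typing import List, Tuple

# (bandwidth in bits per second, resolution, playlist path); all values are illustrative.
VARIANTS: List[Tuple[int, str, str]] = [
    (800_000, "640x360", "360p/index.m3u8"),
    (2_800_000, "1280x720", "720p/index.m3u8"),
    (5_000_000, "1920x1080", "1080p/index.m3u8"),
]


def build_master_playlist(variants: List[Tuple[int, str, str]]) -> str:
    """Return the text of a minimal playlist listing one entry per quality level."""
    lines = ["#EXTM3U"]
    for bandwidth, resolution, uri in variants:
        # Each variant is described by a tag line followed by the URI of its playlist.
        lines.append(f"#EXT-X-STREAM-INF:BANDWIDTH={bandwidth},RESOLUTION={resolution}")
        lines.append(uri)
    return "\n".join(lines) + "\n"


playlist = build_master_playlist(VARIANTS)
print(playlist)
```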

HLS is still going strong today, with new platforms adopting the protocol. In January 2023, rlaxx (an advertising-based video on demand platform) partnered with Wurl (a connected TV solutions provider), marking the first integration of HLS on rlaxx TV.

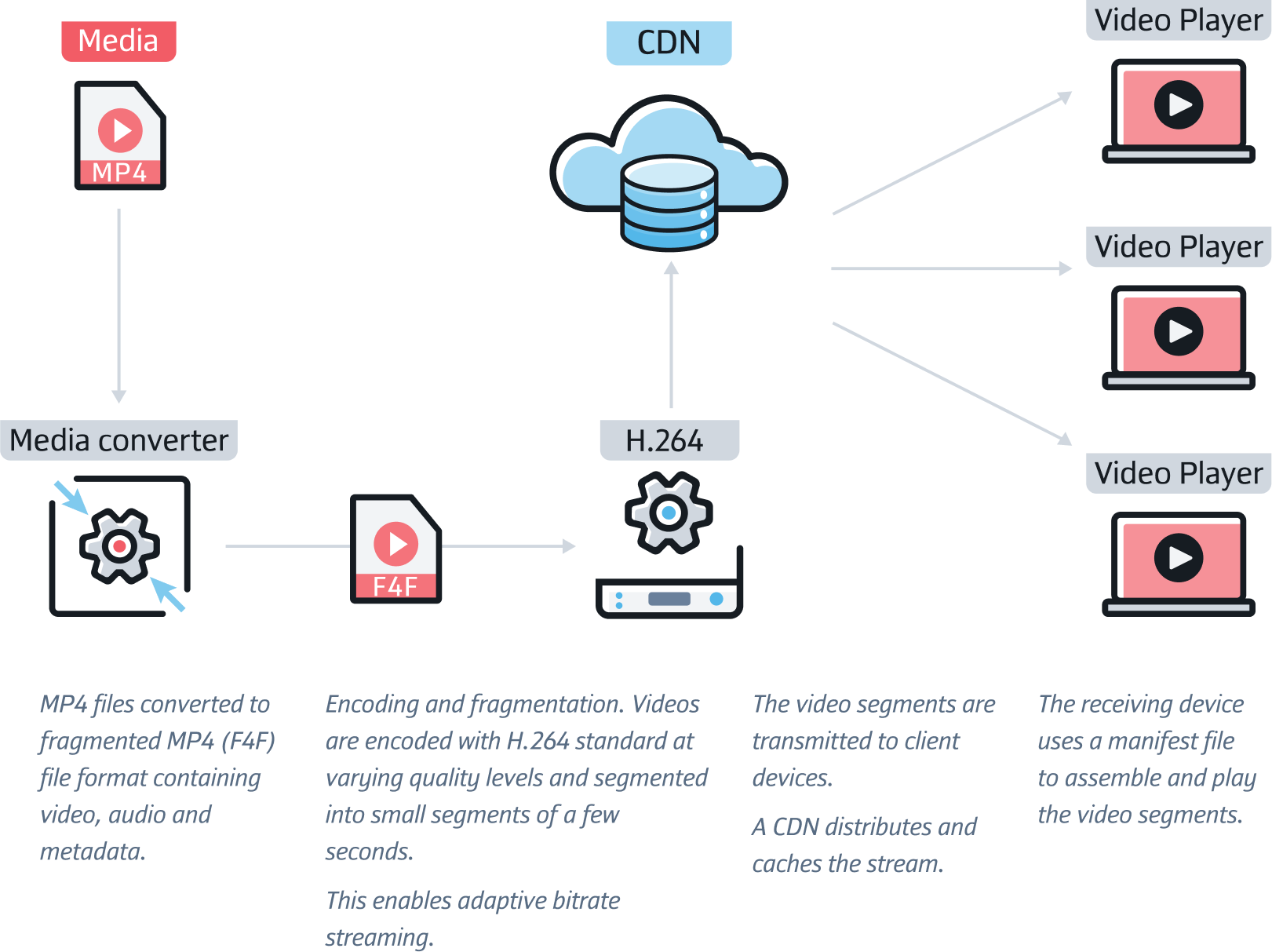

Adobe was also working on its own HTTP-based protocol. HDS, also developed in 2009, is a type of adaptive bitrate streaming protocol.

HDS delivers MP4 content over HTTP for live or on-demand streaming. The protocol was developed for use with Adobe products like Flash Player and AIR and is not supported by Apple devices. HDS was never as widely adopted as HLS and, after Flash was discontinued and the number of people with HDS-compatible devices declined, the protocol fell out of mainstream use.

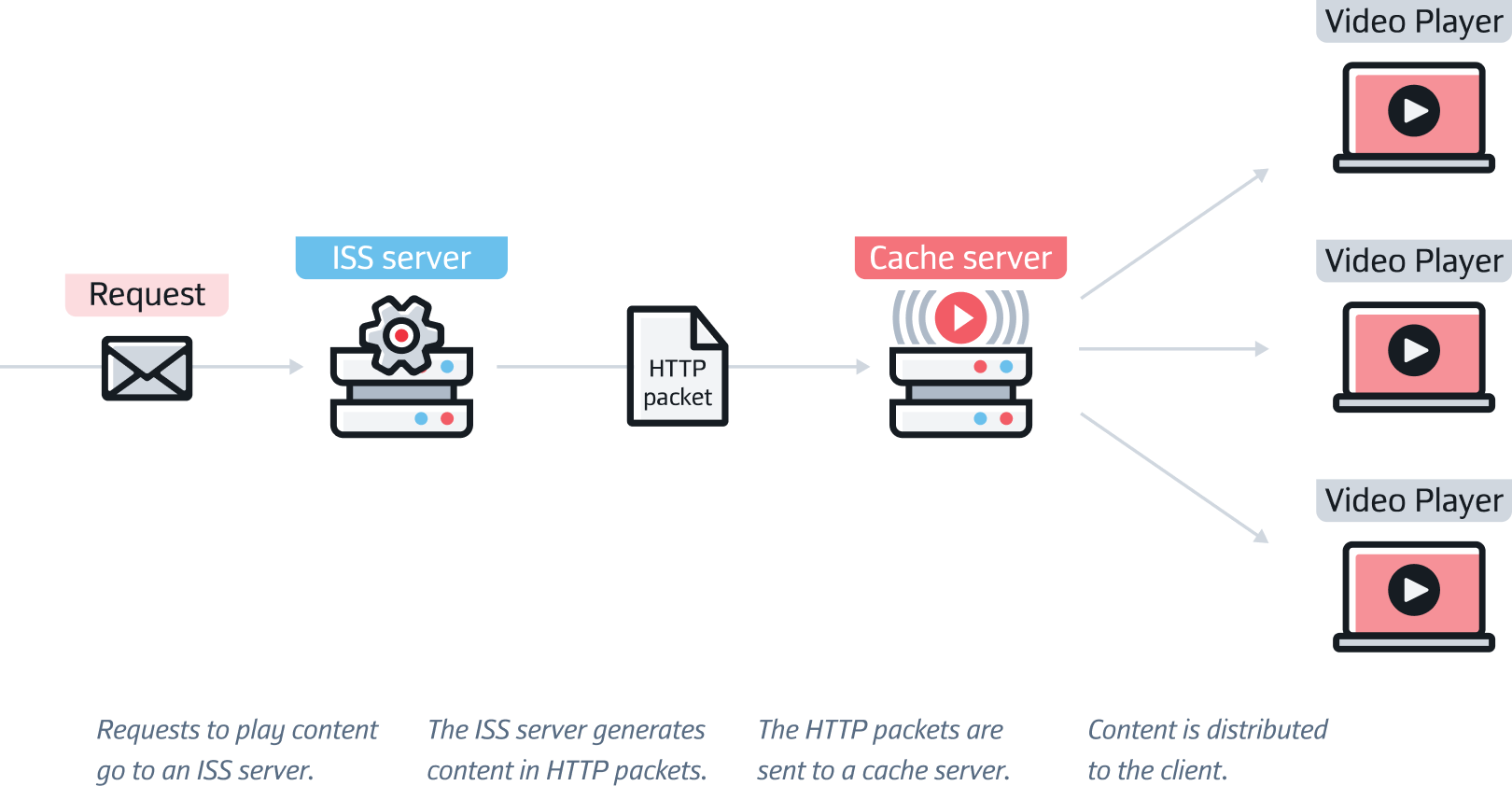

While the Apple-Adobe debacle raged, another protocol was entering the fray. In October of 2008, Microsoft announced that a new HTTP-based adaptive streaming extension would be added to its Internet Information Services (IIS) web server. MSS facilitated the targeted delivery of HD content by detecting bandwidth conditions, CPU utilization, and playback window resolution.

Microsoft put its new streaming technology to the test at a series of major sporting events including the 2008 Beijing Olympics, the 2009 Wimbledon Championships, and the 2010 Vancouver Winter Olympics, during which NBC Sports used the technology to deliver streams to 15.8 million users - a testament to the merits of HTTP streaming for large-scale live event streaming.

It was in the 2010s that live-streaming finally had its moment of glory. Video consumption on mobile devices was up and the appetite for live video started to grow exponentially.

In 2011, Twitch as we know it today was founded. In October 2012, a YouTube live stream of Felix Baumgartner’s skydive drew 8 million simultaneous viewers. In March 2015, Periscope (a dedicated video streaming app) launched, allowing users to share live videos directly from their smartphones. And by 2016, Facebook Live had been rolled out to all users.

Alongside the growth of live streaming, this was the decade when on-demand streaming services grew to prominence. With even more kids on the block, it became clear that the battle between proprietary streaming protocols had to be resolved. In 2010, a group of major streaming and media companies including Microsoft, Netflix, Google, and Adobe, got together to create an industry streaming standard. And by 2012, MPEG-DASH was born.

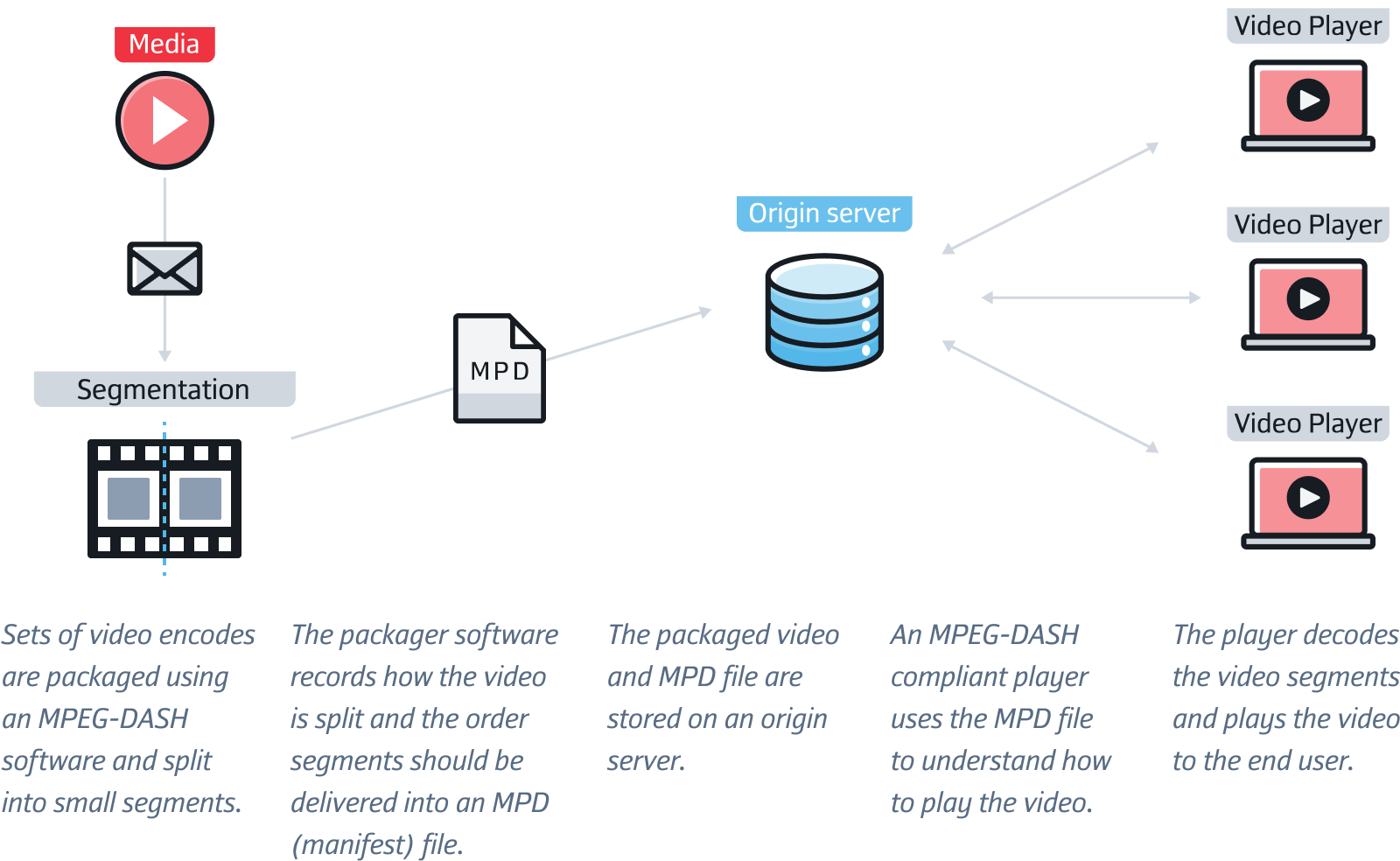

MPEG-DASH is a type of adaptive bitrate streaming that enables the streaming of media content delivered from HTTP web servers. It was designed by the Moving Picture Experts Group (MPEG) as an alternative to proprietary streaming protocols like HLS. The aim was to create an industry standard for adaptive streaming.

From a technical standpoint, the protocol works in a similar way to HLS (by segmenting content into small chunks that the player downloads and decodes for playback). The key difference is that MPEG-DASH is not proprietary: it is an open, international standard. The protocol is also codec agnostic (unlike HLS, which specifies the use of certain codecs).

MPD stands for Media Presentation Description. An MPD file contains the metadata used by the DASH Client to (a) create the HTTP-URLs needed to access Segments, and (b) deliver the streaming service to the end-user.
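As a rough sketch of step (a), the example below reads a tiny hand-written MPD and expands its segment template into URLs. The element and attribute names (`Period`, `AdaptationSet`, `Representation`, `SegmentTemplate`, `$RepresentationID$`, `$Number$`) follow the DASH MPD schema, but the manifest content, the base URL, and the helper name are assumptions made for illustration; real manifests are considerably richer.

```python
import xml.etree.ElementTree as ET
from typing import List

# Hand-written, heavily trimmed MPD used only for this example.
MPD_XML = """<MPD xmlns="urn:mpeg:dash:schema:mpd:2011" type="static">
  <Period>
    <AdaptationSet mimeType="video/mp4">
      <SegmentTemplate media="video/$RepresentationID$/seg-$Number$.m4s" startNumber="1"/>
      <Representation id="720p" bandwidth="2800000"/>
      <Representation id="360p" bandwidth="800000"/>
    </AdaptationSet>
  </Period>
</MPD>"""

NS = {"dash": "urn:mpeg:dash:schema:mpd:2011"}


def segment_urls(mpd_xml: str, base_url: str, representation_id: str,
                 count: int) -> List[str]:
    """Expand the segment template into the first `count` segment URLs."""
    root = ET.fromstring(mpd_xml)
    for adaptation_set in root.findall("dash:Period/dash:AdaptationSet", NS):
        template = adaptation_set.find("dash:SegmentTemplate", NS)
        ids = [r.get("id") for r in adaptation_set.findall("dash:Representation", NS)]
        if template is None or representation_id not in ids:
            continue
        start = int(template.get("startNumber", "1"))
        media = template.get("media", "")
        return [
            base_url
            + media.replace("$RepresentationID$", representation_id)
                   .replace("$Number$", str(number))
            for number in range(start, start + count)
        ]
    raise KeyError(f"representation {representation_id!r} not found")


# Hypothetical CDN base URL.
urls = segment_urls(MPD_XML, "https://cdn.example.com/", "720p", 3)
print("\n".join(urls))
```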

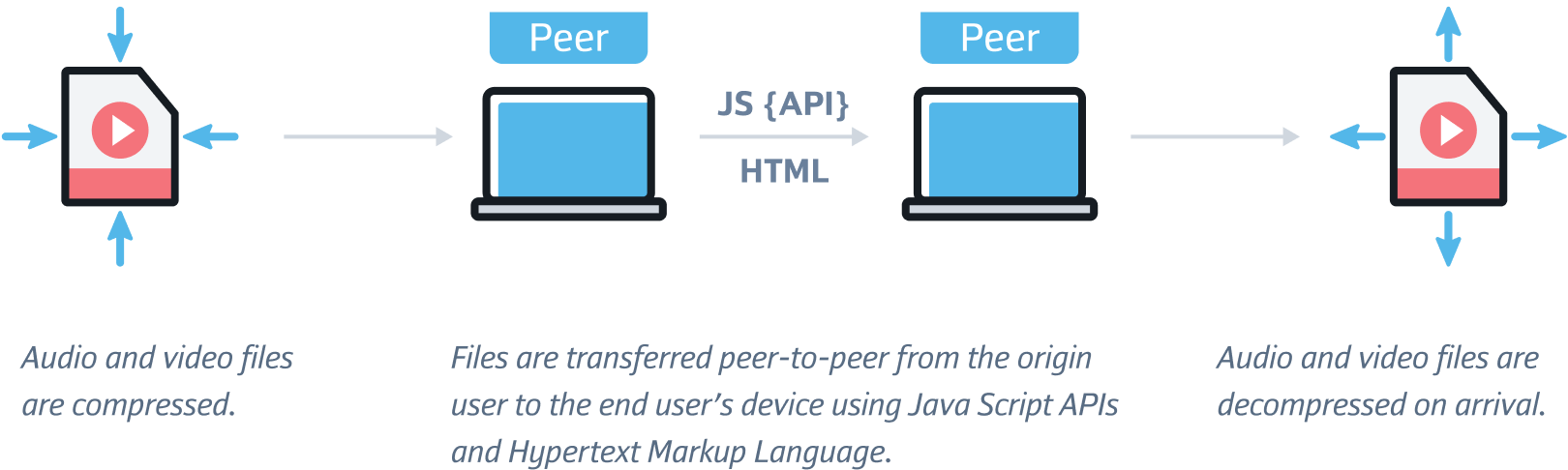

After Google acquired Global IP Solutions in 2010, the company developed WebRTC, an open-source project for browser-based real-time communications. The first version of WebRTC was created by Ericsson Labs in 2011.

The framework was designed to facilitate real-time peer-to-peer communication between browsers enabling voice, video, and data to be shared. However, even though WebRTC was designed as a one-to-one communication solution, many streaming platforms are now trying to achieve one-to-many broadcasts using the protocol.

The WebRTC framework was foundational in advancing real-time voice, text, and video communications. Prior to its creation, platforms like Skype and Google Hangouts relied on native applications and plugins.

WebRTC implemented an open-source, plugin-free framework for in-browser communication consisting of a JavaScript API and a suite of communication protocols. It enabled developers to build real-time communication directly into browser-based software, with media flowing peer-to-peer rather than through intermediary servers wherever possible.

And although adoption was slow to start (Microsoft didn’t begin supporting WebRTC until 2015 and Apple held off until 2017), it has since become one of the most widely used streaming protocols for high-quality online communications.

Today the protocol is used by tech giants like Google, Microsoft, and Apple, and WebRTC technology is employed by many popular communication applications (WhatsApp, Discord, Google Chat, etc.). A testament to its success, the global WebRTC Solutions market is forecast to reach a valuation of $46,336.6 million by 2027.

In April 2022, Comrex debuted a new remote contribution software, Gagl, at the National Association of Broadcasters (NAB) Show in Las Vegas. Gagl is a cloud service delivering high-quality conferenced audio from multiple contributors. The software uses WebRTC technology, allowing up to five users to send and receive audio from computers and smartphones by clicking on a link using a common browser.

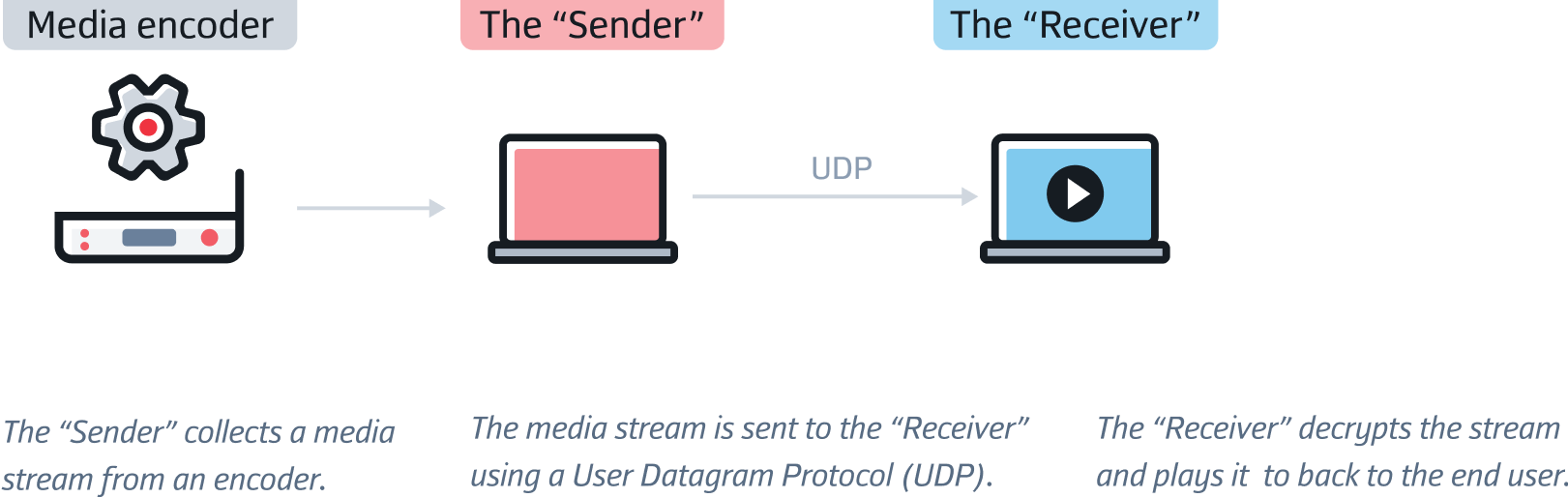

SRT is an open-source video transport protocol designed to deliver low latency streams over public and private networks and to optimize streaming over unpredictable networks.

Instead of sending data packets over the public internet using Transmission Control Protocol (TCP), SRT uses a packet-based, “connectionless” protocol called User Datagram Protocol (UDP). UDP doesn’t need to establish a network connection before sending data, making it faster than TCP.

| Transmission Control Protocol | User Datagram Protocol |
| --- | --- |
| Connection-based protocol | Connectionless protocol |
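The "connectionless" property is easy to see with plain sockets. In the sketch below, a sender transmits a single datagram to a receiver on the local machine without any handshake, accept, or connect step. It illustrates raw UDP only: the recovery and encryption that SRT layers on top are not shown, and the function name and payload are illustrative.

```python
import socket


def udp_round_trip(message: bytes) -> bytes:
    """Send one datagram over loopback and return what the receiver gets."""
    receiver = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    sender = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    try:
        # Port 0 asks the operating system for any free port.
        receiver.bind(("127.0.0.1", 0))
        receiver.settimeout(2.0)
        # No connection is established first; the datagram is simply addressed and sent.
        sender.sendto(message, receiver.getsockname())
        data, _sender_address = receiver.recvfrom(2048)
        return data
    finally:
        sender.close()
        receiver.close()


print(udp_round_trip(b"frame-0001"))
```

The flip side of skipping the handshake is that UDP itself gives no delivery or ordering guarantees, which is why protocols built on it add their own recovery mechanisms.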

The SRT protocol maintains video quality in spite of bandwidth fluctuation and packet loss and helps to keep streams secure by using 128/256-bit AES encryption to ensure that content is protected end-to-end from unauthorized parties.

The protocol was designed by Haivision in 2012 as an alternative to RTMP and was first demoed at the International Broadcasting Convention in 2013. In 2017, SRT was released on GitHub as an open source protocol and that same year Haivision connected with the streaming engine, Wowza, to create the SRT Alliance, a community of industry leaders working towards the continuous improvement of SRT.

In March 2023, Haivision announced that YouTube would be joining the SRT Alliance. The company believes that YouTube’s support marks an important milestone for the protocol and could be the catalyst for more widespread adoption. “Seeing the adoption of our SRT technology by YouTube and other industry leaders has shown how ubiquitous the protocol has become for low latency end-to-end video transport,” comments Mirko Wicha, Chairman and CEO of Haivision. He continues: “With YouTube now a member of the SRT Alliance, some of the most influential global media and entertainment organisations are now leveraging SRT in their workflows”.

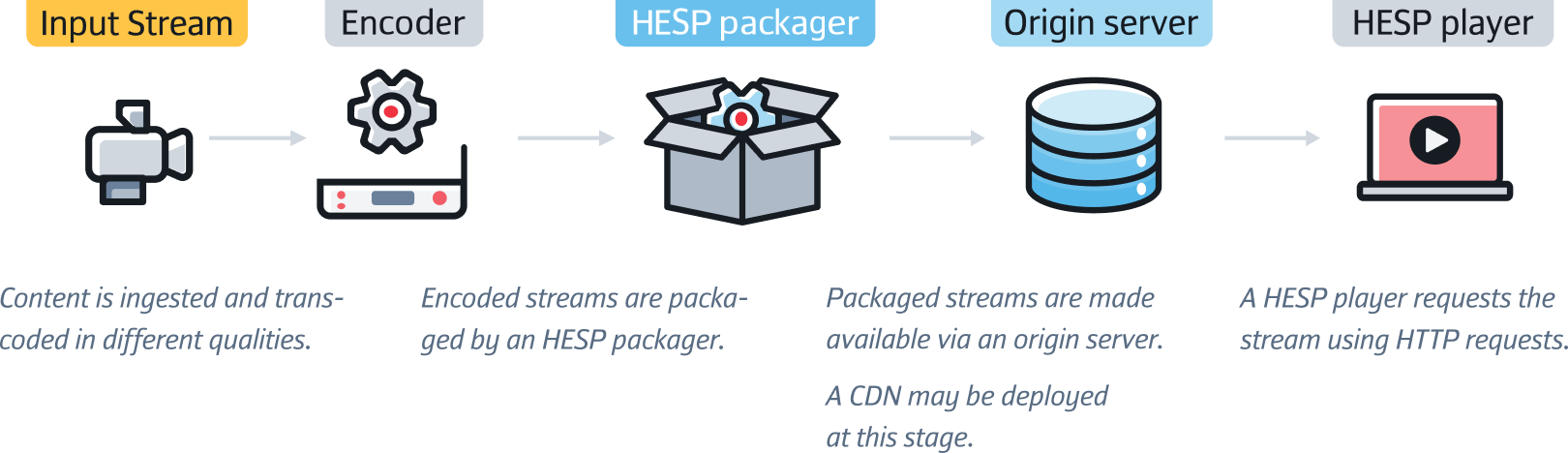

HESP is an adaptive HTTP-based video streaming protocol developed by THEO Technologies. The protocol was first introduced at the 2019 National Association of Broadcasters (NAB) Show. Achieving low latency, scalability, and the best possible viewer experience had traditionally been challenging for streaming platforms (typically, achieving one had come at the expense of another). But HESP offered a way for streaming platforms to achieve all three, even over standard HTTP infrastructure and content delivery networks (CDNs).

Instead of a segment-based approach, HESP uses a frame-based streaming approach. This involves using two different streams, an initialization stream containing key frames (used when a new stream is started) and a continuation stream (an encoded stream which continues playback after each initialization stream image). Using two streams makes it possible to start playback at the most recent position in the video stream. And because the initialization stream’s images are all key frames, viewers can instantaneously start streams.
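The effect of the two-stream design on start-up position can be illustrated with a toy model. The sketch below is not an implementation of the HESP specification: the function names, the frame numbering, and the 60-frame segment length are all assumptions, chosen only to contrast joining at the live frame with joining at the start of the current segment.

```python
from typing import List, Tuple


def frame_based_start(live_position: int) -> List[Tuple[str, int]]:
    """Toy model: fetch a key frame for the live position, then continue from the next frame."""
    return [("initialization", live_position), ("continuation", live_position + 1)]


def segment_based_start(live_position: int, frames_per_segment: int) -> int:
    """Toy model: a segment-based player begins at the first frame of the current segment."""
    return (live_position // frames_per_segment) * frames_per_segment


live = 137  # index of the most recent frame in the stream
plan = frame_based_start(live)
segment_start = segment_based_start(live, frames_per_segment=60)

print("frame-based fetch plan:", plan)
print("segment-based start frame:", segment_start, "| frames behind live:", live - segment_start)
```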

In 2020, THEO Technologies partnered with Synamedia to form the HESP Alliance, with the intention of accelerating the adoption and wider standardization of HESP.

“The HESP protocol developed by our team establishes a new baseline for video delivery. This will help video service providers to boost engagement with unparalleled viewer experiences stemming from improvements in latency reduction, faster zapping times, and scalability,” comments Steven Tielemans, CEO at THEO Technologies.

Since 2020, streaming has become firmly embedded as a mainstay of modern life. On-demand streaming services have gone from novelty to an entertainment necessity for most, live streaming for work and education has become the norm, and the video game streaming market is thriving. Streaming has well and truly made it to the mainstream and new use cases such as digital fitness, live commerce, and telehealth are taking the world by storm.

Exercise in the home is a growing trend with live and on-demand fitness platforms like Les Mills, ClassPass, and Wellbeats making it easier to work out from home.

The global virtual fitness market size was valued at $16.4 billion in 2022 with the on-demand market (pre-recorded videos) segment accounting for the largest market share.

Livestream e-commerce involves the promotion and selling of products and services in real time on digital platforms such as TikTok.

Asia Pacific is a strong market in this sector and it is expected that live and social commerce in China will outperform traditional commerce by the end of 2023.

Academics at Soongsil University in Seoul, Korea are now proposing the incorporation of the metaverse within live commerce using digital twin technology (a digital twin is a digitized representation of a physical object or environment).

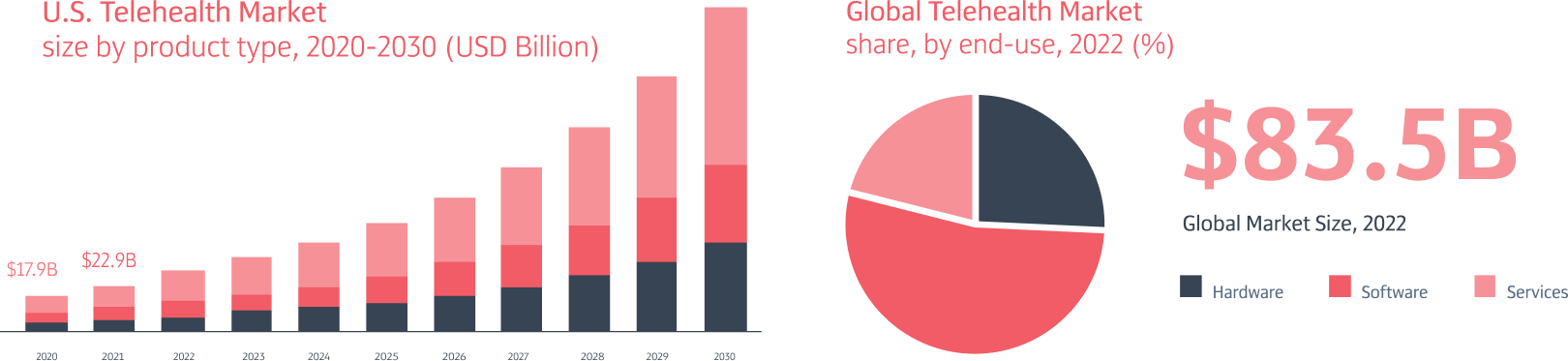

Technology is meeting healthcare head on and more people are choosing to access healthcare services remotely via virtual check-ups using their computers, tablets, or smartphones.

The global telehealth market size was estimated at $83.5 billion in 2022 and is expected to reach $101.15 billion by the end of 2023.

At present, web-based delivery dominates the market with an overall revenue share of 45.7% as of 2022.

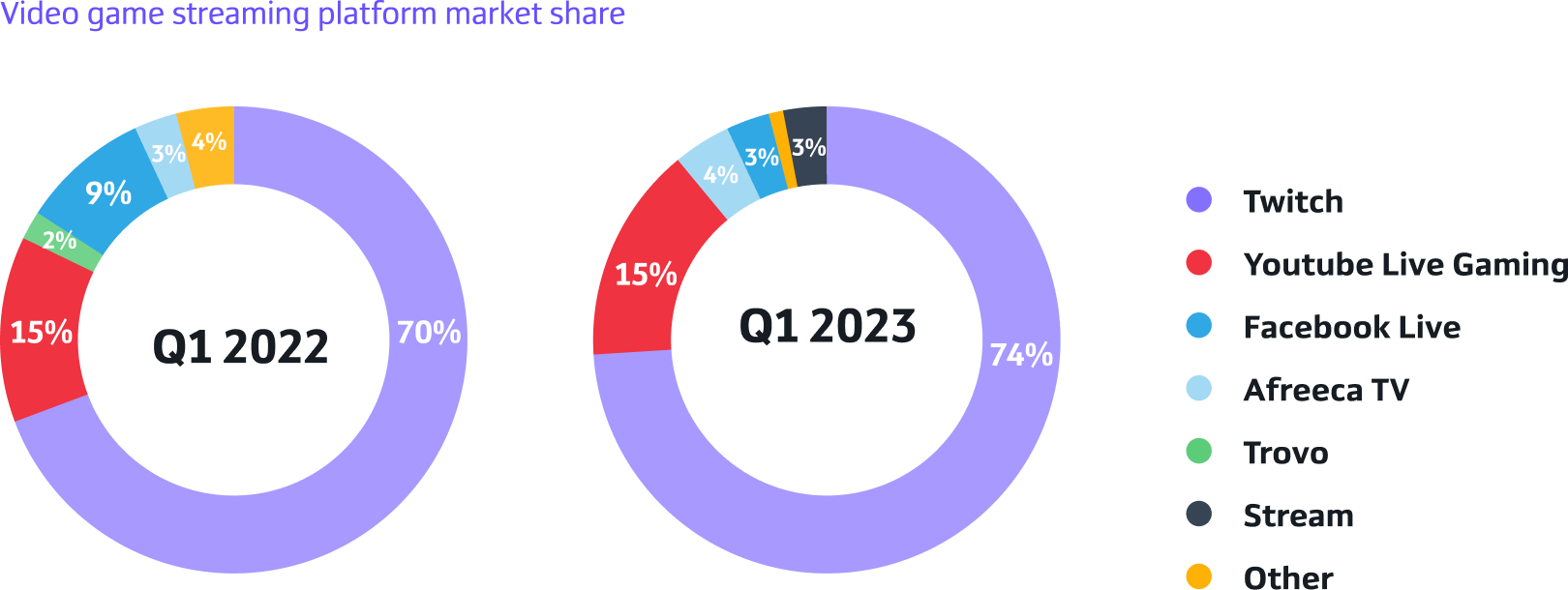

Fueled by increased adoption of video streaming apps on mobile devices, game streaming platforms are going strong.

Twitch remains the largest platform. Twitch amassed a combined 5.71 billion hours watched on its platform worldwide in the third quarter of 2022.

40% of surveyed video game development professionals worldwide now believe that streaming will grow the most out of any gaming platform by 2025.

In January 2021, Flash was officially discontinued after steadily declining usage amongst companies like YouTube, Netflix, and Facebook. Since then, broadcasters and content creators have turned to other streaming protocols to share their content. The development of new streaming protocols has continued since HESP arrived in 2019 to meet the changing demands of today’s on-demand and live streaming platforms.

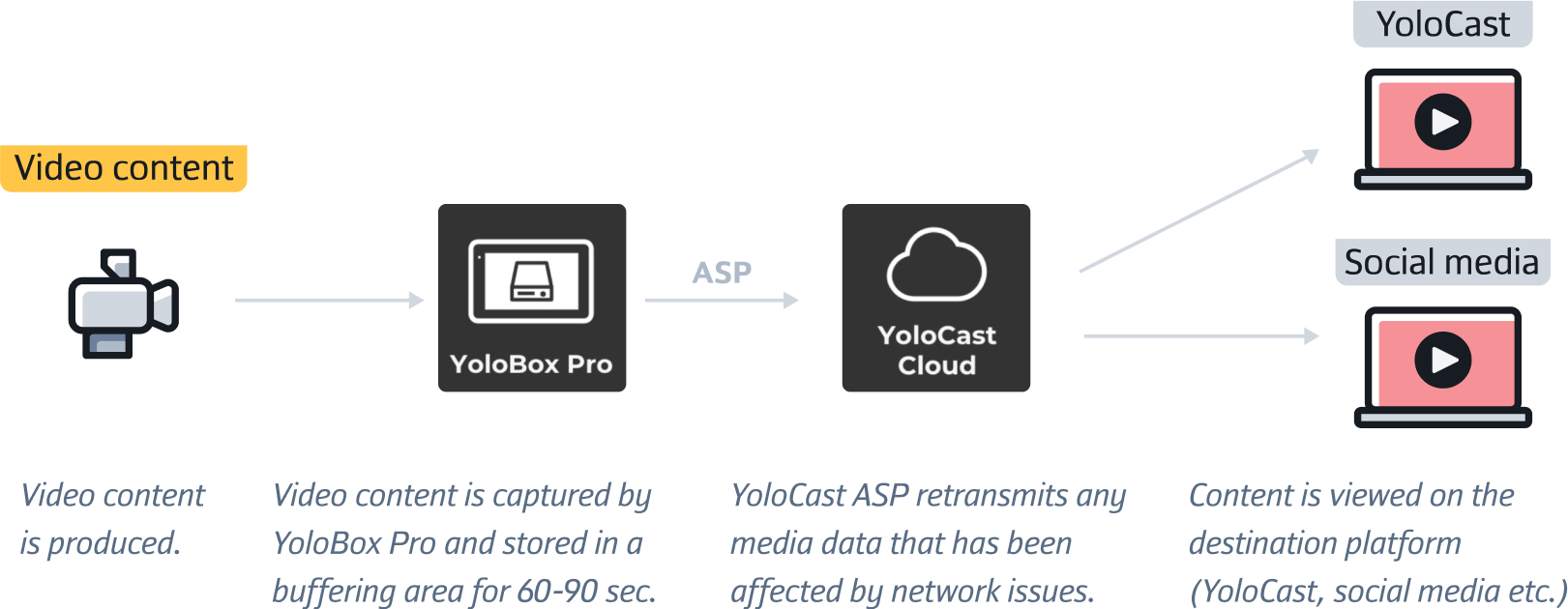

On 25 December 2022, the live streaming and online video hosting platform, YoloLiv, released ASP. ASP is a smart streaming protocol that sends stream data to its own CDN (called YoloCast). ASP stores and transmits more redundant data than other streaming protocols like RTMP thereby reducing loss of quality even under unstable internet connection conditions. At the time of writing, ASP is in beta and works exclusively with YoloBox Pro (YoloLiv’s all-in-one video switcher, encoder, streamer, monitor, recorder, and audio mixer).

According to YoloLiv, the protocol guarantees “complete and error-free content delivery”. This is possible because video content is stored in a special buffering area within YoloBox Pro for 60-90 seconds. YoloCast ASP then retransmits any media data that is out of order due to network issues so that network disruptions aren’t noticeable by audiences.
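The general idea of buffering data and repairing gaps before playback can be sketched with a small reorder buffer keyed by sequence number. This is a generic illustration, not YoloLiv's implementation: the class, its methods, and the use of integer sequence numbers are assumptions made for the example.

```python
from typing import Dict, List


class ReorderBuffer:
    """Hold out-of-order packets and release payloads strictly in sequence order."""

    def __init__(self, first_seq: int = 0) -> None:
        self._next_seq = first_seq
        self._pending: Dict[int, bytes] = {}

    def push(self, seq: int, payload: bytes) -> List[bytes]:
        """Store a packet and return every payload that is now deliverable in order."""
        if seq >= self._next_seq:  # ignore packets that were already delivered
            self._pending[seq] = payload
        ready: List[bytes] = []
        while self._next_seq in self._pending:
            ready.append(self._pending.pop(self._next_seq))
            self._next_seq += 1
        return ready

    def missing(self) -> List[int]:
        """Sequence numbers that would have to be retransmitted to unblock buffered data."""
        if not self._pending:
            return []
        return [s for s in range(self._next_seq, max(self._pending))
                if s not in self._pending]


buffer = ReorderBuffer()
print(buffer.push(0, b"a"))  # in order, delivered immediately
print(buffer.push(2, b"c"))  # held back because packet 1 has not arrived
print(buffer.missing())      # the gap a retransmission request would cover
print(buffer.push(1, b"b"))  # the gap is filled, so both held payloads are released
```

The longer the buffer is allowed to hold data, the more time there is to repair gaps, at the cost of added delay between capture and playback.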

ASP isn’t the only new protocol to have hit the scene recently. Adaptive Reliable Transport (ART) and MRV2 are two new streaming protocols currently in their infancy.

In 2022 a new streaming protocol called ART was launched. The protocol was developed jointly by Teradek and Amimon and supports real-time and bi-directional streaming between two ART systems over LAN or WAN. The protocol also supports:

- Point-to-point streaming between ART encoders and decoders
- Multicast over LAN
- Distribution from a single ART encoder to multiple ART decoders

One of the most innovative features of ART is that it optimizes for video quality and network characteristics simultaneously. According to Teradek, this enables “lifelike bi-directional streaming interaction between anchors and guests”.

“ART is without a doubt the most impressive video transport protocol available today [and] no other protocol can deliver broadcast quality, real-time performance over WAN like it” comments Michael Gailing, General Manager of Live Production at Teradek.

One of the latest protocols to hit the scene is MRV2. The digital audio technology and advertising company Triton Digital developed the MRV2 protocol as a proprietary streaming transport protocol for use between the station’s master control room and CDN. The objective is to make ad stitching (server-side ad insertion) more accurate and maintain high-quality listening experiences. In April 2023, Triton Digital announced that Wheatstone would be adding the MRV2 protocol to its streaming products and software.

In 2022, TheoPlayer announced the release of HESP.live. HESP.live is the first HTTP-based real-time video streaming infrastructure capable of achieving sub-second latency at scale. It uses TheoPlayer’s HESP protocol to enable live interactivity and, at the time of writing, it is the fastest HTTP-based video live streaming solution.

“The real game changer here is not only the sub-second latency, but also the unprecedented scaling abilities to reach your entire audience,” comments Steven Tielemans, CEO of THEO Technologies, in the press release.

Streaming protocols have been changing the way we consume video content for the best part of three decades. With the rise of streaming services, online video consumption has surpassed traditional broadcast television. And the evolution of streaming protocols shows no sign of slowing down.

As demand for seamless content delivery mounts, and streaming platforms diversify into new markets, the streaming industry (and the technology that drives it) will need to prioritize continuous innovation.

Driving down latency will remain a priority. We’ve already seen streaming protocols start to work towards latency reduction. HTTP streaming protocols like HLS and DASH, for example, have each brought out low latency versions of their protocols: Low-Latency HLS (LL-HLS) and Low-Latency DASH (LL-DASH), respectively.

Streaming protocols will also need to strive towards higher levels of interoperability, improved video delivery, and the ability to serve a landscape increasingly dominated by ubiquitous streaming across interconnected devices.

At the time of writing, connectivity and interactive broadcasting are the trends to watch. Over-the-top (OTT) content delivery has been steadily dominating the market and is expected to reach a valuation of $194.20 billion by 2025, marking a 13.87% CAGR between 2019 and 2025.

But, as Erik Otto, Chief Executive of Compliance at Mediproxy writes, this isn’t straightforward:

“The inherent challenge in this for broadcasters and service providers is to cope with many variations of current streaming protocols and technical standards.”

One thing’s for sure. Streaming protocols have come a long way since the 1990s. As technology continues to improve, we can expect to see the development of new streaming protocols and even faster streaming experiences.

The race to achieve the lowest levels of latency at the highest scale is well and truly on.